The transport layer

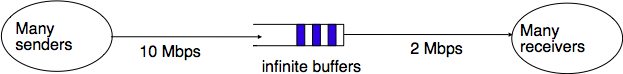

As the transport layer is built on top of the network layer, it is important to know the key features of the network layer service. There are two types of network layer services : connectionless and connection-oriented. The connectionless network layer service is the most widespread. Its main characteristics are :

- the connectionless network layer service can only transfer SDUs of limited size [1]

- the connectionless network layer service may discard SDUs

- the connectionless network layer service may corrupt SDUs

- the connectionless network layer service may delay, reorder or even duplicate SDUs

These imperfections of the connectionless network layer service will become much clearer once we have explained the network layer in the next chapter. At this point, let us simply assume that these imperfections occur without trying to understand why they occur.

Some transport protocols can be used on top of a connection-oriented network service, such as class 0 of the ISO Transport Protocol (TP0) defined in [X224] , but they have not been widely used. We do not discuss in further detail such utilisation of a connection-oriented network service in this book.

This chapter is organised as follows. We will first explain how it is possible to provide a reliable transport service on top of an unreliable connectionless network service. For this, we explain the main mechanisms found in such protocols. Then, we will study in detail the two transport protocols that are the most commonly used in the Internet. We begin with the User Datagram Protocol (UDP) which provides a simple connectionless transport service. Then, we will describe in detail the Transmission Control Protocol (TCP), including its congestion control mechanism.

Footnotes

| [1] | Many network layer services are unable to carry SDUs that are larger than 64 KBytes. |

Principles of a reliable transport protocol

In this section, we depict a reliable transport protocol running above a connectionless network layer service. For this, we first assume that the network layer provides a perfect service, i.e. :

- the connectionless network layer service never corrupts SDUs

- the connectionless network layer service never discards SDUs

- the connectionless network layer service never delays, reorders or duplicates SDUs

- the connectionless network layer service can support SDUs of any size

We will then remove each of these assumptions one after the other in order to better understand the mechanisms used to solve each imperfection.

Reliable data transfer on top of a perfect network service

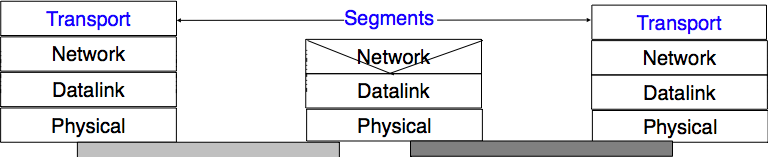

The transport layer entity interacts with both a user in the application layer and an entity in the network layer. According to the reference model, these interactions will be performed using DATA.req and DATA.ind primitives. However, to simplify the presentation and to avoid confusion between a DATA.req primitive issued by the user of the transport layer entity, and a DATA.req issued by the transport layer entity itself, we will use the following terminology :

- the interactions between the user and the transport layer entity are represented by using the classical DATA.req, DATA.ind primitives

- the interactions between the transport layer entity and the network layer service are represented by using send instead of DATA.req and recvd instead of DATA.ind

This is illustrated in the figure below.

When running on top of a perfect connectionless network service, a transport level entity can simply issue a send(SDU) upon arrival of a DATA.req(SDU). Similarly, the receiver issues a DATA.ind(SDU) upon receipt of a recvd(SDU). Such a simple protocol is sufficient when a single SDU is sent.

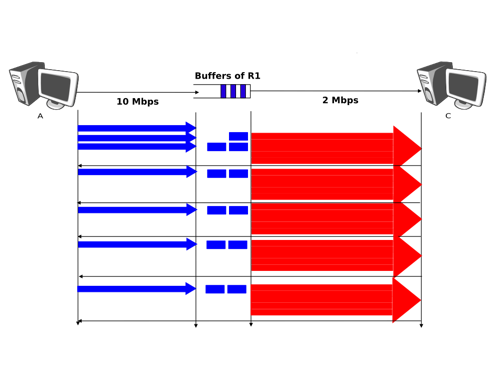

Unfortunately, this is not always sufficient to ensure a reliable delivery of the SDUs. Consider the case where a client sends tens of SDUs to a server. If the server is faster than the client, it will be able to receive and process all the segments sent by the client and deliver their content to its user. However, if the server is slower than the client, problems may arise. The transport layer entity contains buffers to store SDUs that have been received as a Data.request from the application but have not yet been sent via the network service. If the application is faster than the network layer, the buffer becomes full and the operating system suspends the application to let the transport entity empty its transmission queue. The transport entity also uses a buffer to store the segments received from the network layer that have not yet been processed by the application. If the application is slow to process the data, this buffer becomes full and the transport entity can no longer accept segments from the network layer. The buffers of the transport entity have a limited size [2] and if they overflow, the transport entity is forced to discard received segments.

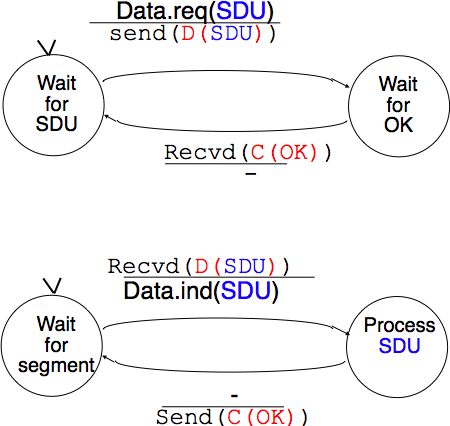

To solve this problem, our transport protocol must include a feedback mechanism that allows the receiver to inform the sender that it has processed a segment and that another one can be sent. This feedback is required even though the network layer provides a perfect service. To include such feedback, our transport protocol must process two types of segments :

- data segments carrying an SDU

- control segments carrying an acknowledgment indicating that the previous segment was processed correctly

These two types of segments can be distinguished using a segment composed of two parts :

- the header that contains one bit set to 0 in data segments and set to 1 in control segments

- the payload that contains the SDU supplied by the user application

The transport entity can then be modelled as a finite state machine, containing two states for the receiver and two states for the sender. The figure below provides a graphical representation of this state machine with the sender above and the receiver below.

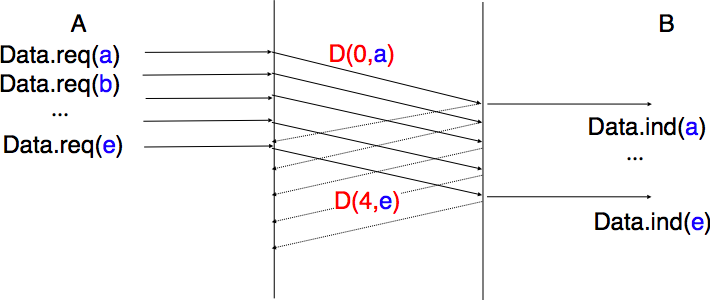

The above FSM shows that the sender has to wait for an acknowledgement from the receiver before being able to transmit the next SDU. The figure below illustrates the exchange of a few segments between two hosts.

Reliable data transfer on top of an imperfect network service

The transport layer must deal with the imperfections of the network layer service. There are three types of imperfections that must be considered by the transport layer :

- Segments can be corrupted by transmission errors

- Segments can be lost

- Segments can be reordered or duplicated

To deal with these types of imperfections, transport protocols rely on different types of mechanisms. The first problem is transmission errors. The segments sent by a transport entity are processed by the network and datalink layers and finally transmitted by the physical layer. All of these layers are imperfect. For example, the physical layer may be affected by different types of errors :

- random isolated errors where the value of a single bit has been modified due to a transmission error

- random burst errors where the values of n consecutive bits have been changed due to transmission errors

- random bit creations and random bit removals where bits have been added or removed due to transmission errors

The only solution to protect against transmission errors is to add redundancy to the segments that are sent. Information Theory defines two mechanisms that can be used to transmit information over a transmission channel affected by random errors. These two mechanisms add redundancy to the information sent, to allow the receiver to detect or sometimes even correct transmission errors. A detailed discussion of these mechanisms is outside the scope of this chapter, but it is useful to consider a simple mechanism to understand its operation and its limitations.

Information theory defines coding schemes. There are different types of coding schemes, but let us focus on coding schemes that operate on binary strings. A coding scheme is a function that maps information encoded as a string of m bits into a string of n bits. The simplest coding scheme is the even parity coding. This coding scheme takes an m bits source string and produces an m+1 bits coded string where the first m bits of the coded string are the bits of the source string and the last bit of the coded string is chosen such that the coded string will always contain an even number of bits set to 1. For example :

- 1001 is encoded as 10010

- 1101 is encoded as 11011

This parity scheme has been used in some RAMs as well as to encode characters sent over a serial line. It is easy to show that this coding scheme allows the receiver to detect a single transmission error, but it cannot correct it. However, if two or more bits are in error, the receiver may not always be able to detect the error.
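The even parity scheme can be sketched in a few lines of Python. This is a minimal illustration, assuming bit strings are represented as Python strings of `0` and `1`; the function names `encode_even_parity` and `check_even_parity` are hypothetical and only serve the example.

```python
def encode_even_parity(bits: str) -> str:
    """Append one parity bit so that the coded string has an even number of 1s."""
    parity = bits.count("1") % 2
    return bits + str(parity)


def check_even_parity(coded: str) -> bool:
    """Return True when the coded string contains an even number of 1s."""
    return coded.count("1") % 2 == 0


print(encode_even_parity("1001"))   # 10010
print(encode_even_parity("1101"))   # 11011
print(check_even_parity("10010"))   # True: no error
print(check_even_parity("10110"))   # False: one flipped bit is detected
print(check_even_parity("11110"))   # True: two flipped bits go unnoticed
```

The last call shows the limitation mentioned above: flipping two bits of `10010` yields a string that still has an even number of bits set to 1, so the receiver accepts it.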

Some coding schemes allow the receiver to correct some transmission errors. For example, consider the coding scheme that encodes each source bit as follows :

- 1 is encoded as 111

- 0 is encoded as 000

For example, consider a sender that sends 111. If there is one bit in error, the receiver could receive 011 or 101 or 110. In these three cases, the receiver will decode the received bit pattern as a 1 since it contains a majority of bits set to 1. If there are two bits in error, the receiver can no longer recover from the transmission error.

This simple coding scheme forces the sender to transmit three bits for each source bit. However, it allows the receiver to correct single bit errors. More advanced coding schemes that can recover from errors are used in several types of physical layers.
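The triple repetition code and its majority decoding can be sketched as follows. The helper names `encode_repetition` and `decode_repetition` are assumptions made for this illustration, and the decoder assumes that the received string length is a multiple of three.

```python
def encode_repetition(bits: str) -> str:
    """Encode each source bit as three identical bits."""
    return "".join(bit * 3 for bit in bits)


def decode_repetition(coded: str) -> str:
    """Decode each group of three bits by majority vote."""
    decoded = []
    for i in range(0, len(coded), 3):
        group = coded[i:i + 3]
        decoded.append("1" if group.count("1") >= 2 else "0")
    return "".join(decoded)


print(encode_repetition("10"))    # 111000
print(decode_repetition("011"))   # 1: a single error is corrected
print(decode_repetition("001"))   # 0: two errors in 111 lead to a wrong decoding
```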

Transport protocols use error detection schemes, but none of the widely used transport protocols rely on error correction schemes. To detect errors, a segment is usually divided into two parts :

- a header that contains the fields used by the transport protocol to ensure reliable delivery. The header contains a checksum or Cyclical Redundancy Check (CRC) [Williams1993] that is used to detect transmission errors

- a payload that contains the user data passed by the application layer.

Some segment headers also include a length , which indicates the total length of the segment or the length of the payload.

The simplest error detection scheme is the checksum. A checksum is basically an arithmetic sum of all the bytes that a segment is composed of. There are different types of checksums. For example, an eight bit checksum can be computed as the arithmetic sum of all the bytes of (both the header and the payload of) the segment. The checksum is computed by the sender before sending the segment and the receiver verifies the checksum upon reception of each segment. The receiver discards segments received with an invalid checksum. Checksums can be easily implemented in software, but their error detection capabilities are limited. Cyclical Redundancy Checks (CRC) have better error detection capabilities [SGP98], but require more CPU when implemented in software.
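As a rough sketch of an eight bit checksum, the code below sums all the bytes of a segment and keeps the low-order eight bits. It is not the Internet checksum. The function names are hypothetical, and the checksum is assumed to travel next to the segment rather than inside a header field.

```python
def checksum8(segment: bytes) -> int:
    """Eight-bit checksum: arithmetic sum of all bytes, truncated to 8 bits."""
    return sum(segment) % 256


def is_valid(segment: bytes, checksum: int) -> bool:
    """Receiver-side check: recompute the sum and compare with the received value."""
    return checksum8(segment) == checksum


sent = b"hello"
c = checksum8(sent)
print(is_valid(b"hello", c))   # True: segment unchanged
print(is_valid(b"hellp", c))   # False: a modified byte changes the sum
print(is_valid(b"ehllo", c))   # True: swapped bytes keep the same sum
```

The last call illustrates one of the limits of such a checksum: an arithmetic sum does not depend on the order of the bytes, so reordered bytes are not detected.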

Note

Checksums, CRCs, ...

Most of the protocols in the TCP/IP protocol suite rely on the simple Internet checksum in order to verify that the received segment has not been affected by transmission errors. Despite its popularity and ease of implementation, the Internet checksum is not the only available checksum mechanism. Cyclical Redundancy Checks (CRC) are very powerful error detection schemes that are used notably on disks, by many datalink layer protocols and file formats such as zip or png. They can easily be implemented efficiently in hardware and have better error-detection capabilities than the Internet checksum [SGP98]. However, when the first transport protocols were designed, CRCs were considered to be too CPU-intensive for software implementations and other checksum mechanisms were used instead. The TCP/IP community chose the Internet checksum, while the OSI community chose the Fletcher checksum [Sklower89]. Efficient techniques to quickly compute CRCs in software are now available [Feldmeier95]. The SCTP protocol initially chose the Adler-32 checksum but later replaced it with a CRC (see RFC 3309).

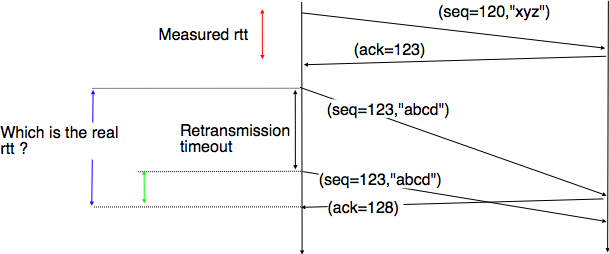

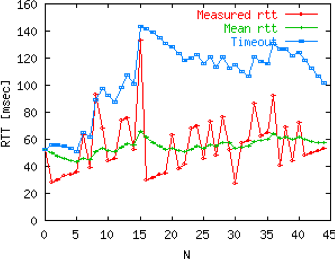

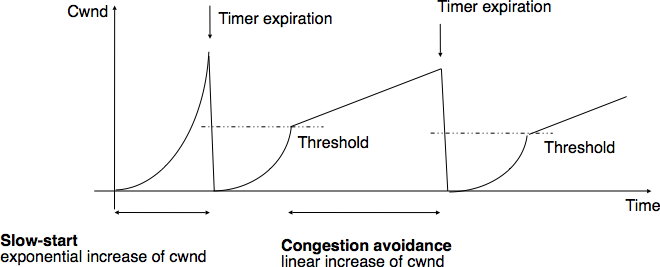

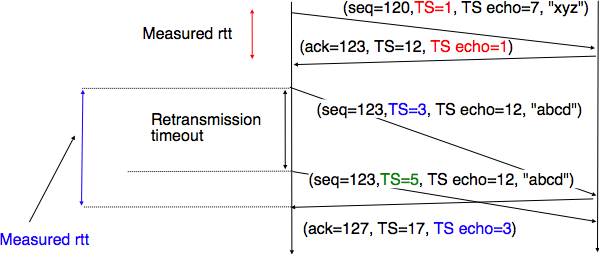

The second imperfection of the network layer is that segments may be lost. As we will see later, the main cause of packet losses in the network layer is the lack of buffers in intermediate routers. Since the receiver sends an acknowledgement segment after having received each data segment, the simplest solution to deal with losses is to use a retransmission timer. When the sender sends a segment, it starts a retransmission timer. The value of this retransmission timer should be larger than the round-trip-time, i.e. the delay between the transmission of a data segment and the reception of the corresponding acknowledgement. When the retransmission timer expires, the sender assumes that the data segment has been lost and retransmits it. This is illustrated in the figure below.

Unfortunately, retransmission timers alone are not sufficient to recover from segment losses. Let us consider, as an example, the situation depicted below where an acknowledgement is lost. In this case, the sender retransmits the data segment that has not been acknowledged. Unfortunately, as illustrated in the figure below, the receiver considers the retransmission as a new segment whose payload must be delivered to its user.

To solve this problem, transport protocols associate a sequence number to each data segment. This sequence number is one of the fields found in the header of data segments. We use the notation D(S,...) to indicate a data segment whose sequence number field is set to S. The acknowledgements also contain a sequence number indicating the data segment that is being acknowledged. We use OKS to indicate an acknowledgement segment that confirms the reception of D(S,...). The sequence number is encoded as a bit string of fixed length. The simplest transport protocol is the Alternating Bit Protocol (ABP).

The Alternating Bit Protocol uses a single bit to encode the sequence number. It can be implemented easily. The sender and the receiver each only require a four-state Finite State Machine.

The initial state of the sender is Wait for D(0,...). In this state, the sender waits for a Data.request. The first data segment that it sends uses sequence number 0. After having sent this segment, the sender waits for an OK0 acknowledgement. A segment is retransmitted upon expiration of the retransmission timer or if an acknowledgement with an incorrect sequence number has been received.

The receiver first waits for D(0,...). If the segment contains a correct CRC, it passes the SDU to its user and sends OK0. If the segment contains an invalid CRC, it is immediately discarded. Then, the receiver waits for D(1,...). In this state, it may receive a duplicate D(0,...) or a data segment with an invalid CRC. In both cases, it returns an OK0 segment to allow the sender to recover from the possible loss of the previous OK0 segment.
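The behaviour of the two entities can be approximated by the small simulation below. It is a sketch under several assumptions that are not part of the protocol description: the channel is a hypothetical one that drops the transmissions whose index appears in `lose_data` or `lose_ack`, a corrupted segment is treated like a lost one, and a retransmission timer expiry is modelled by simply looping. The names `AbpReceiver` and `abp_transfer` are illustrative.

```python
class AbpReceiver:
    def __init__(self):
        self.expected = 0      # sequence number of the next new data segment
        self.delivered = []    # SDUs passed to the user (DATA.ind)

    def recvd(self, seq, sdu):
        """Process D(seq, sdu) and return the sequence number to acknowledge."""
        if seq == self.expected:
            self.delivered.append(sdu)
            self.expected = 1 - self.expected
        # A duplicate is not delivered again, but the last accepted
        # sequence number is acknowledged once more.
        return 1 - self.expected


def abp_transfer(sdus, lose_data=(), lose_ack=()):
    """Send sdus one at a time; return the delivered SDUs and the
    number of data segment transmissions that were needed."""
    receiver = AbpReceiver()
    seq = 0
    transmissions = 0
    for sdu in sdus:
        while True:
            attempt = transmissions
            transmissions += 1
            if attempt in lose_data:
                continue               # data lost: timer expires, retransmit
            ack = receiver.recvd(seq, sdu)
            if attempt in lose_ack:
                continue               # acknowledgement lost: retransmit
            if ack == seq:
                break                  # OK<seq> received, next SDU
        seq = 1 - seq                  # alternate the sequence bit
    return receiver.delivered, transmissions


print(abp_transfer(["a", "b", "c"], lose_data={1}, lose_ack={3}))
# (['a', 'b', 'c'], 5)
```

In this run, the second transmission is lost and the acknowledgement of the fourth one is lost as well. The retransmitted segment carrying `c` arrives twice at the receiver, but it is delivered only once thanks to the sequence bit.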

Note

Dealing with corrupted segments

The receiver FSM of the Alternating bit protocol discards all segments that contain an invalid CRC. This is the safest approach since the received segment can be completely different from the segment sent by the remote host. A receiver should not attempt to extract information from a corrupted segment because it cannot know which portion of the segment has been affected by the error.

The figure below illustrates the operation of the alternating bit protocol.

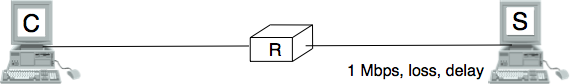

The Alternating Bit Protocol can recover from transmission errors and segment losses. However, it has one important drawback. Consider two hosts that are directly connected by a 50 Kbits/sec satellite link that has a 250 milliseconds propagation delay. If these hosts send 1000 bits segments, then the maximum throughput that can be achieved by the alternating bit protocol is one segment every $20+250+250=520$ milliseconds if we ignore the transmission time of the acknowledgement. This is less than 2 Kbits/sec !
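The arithmetic behind this bound can be checked with a short computation. The function `abp_throughput` is a hypothetical helper that, like the text, ignores the transmission time of the acknowledgement.

```python
def abp_throughput(segment_bits, link_rate_bps, propagation_delay_s):
    """Maximum throughput (bits/sec) when one segment is sent per round trip."""
    transmission_time_s = segment_bits / link_rate_bps       # 1000 / 50000 = 0.020 s
    cycle_time_s = transmission_time_s + 2 * propagation_delay_s
    return segment_bits / cycle_time_s


# 1000-bit segments, 50 Kbits/sec link, 250 ms propagation delay
print(abp_throughput(1000, 50_000, 0.250))   # about 1923 bits/sec
```

Each segment occupies the link for 20 milliseconds, but the sender then stays idle for 500 milliseconds while the segment and its acknowledgement propagate.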

Go-back-n and selective repeat

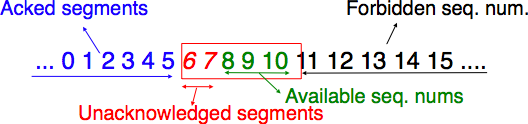

To overcome the performance limitations of the alternating bit protocol, transport protocols rely on pipelining. This technique allows a sender to transmit several consecutive segments without being forced to wait for an acknowledgement after each segment. Each data segment contains a sequence number encoded in an n bits field.

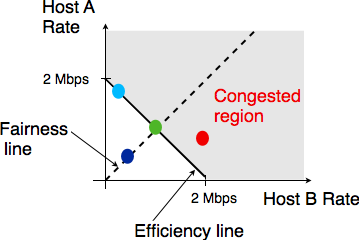

Pipelining allows the sender to transmit segments at a higher rate, but we need to ensure that the receiver does not become overloaded. Otherwise, the segments sent by the sender are not correctly received by the destination. The transport protocols that rely on pipelining allow the sender to transmit W unacknowledged segments before being forced to wait for an acknowledgement from the receiving entity.

This is implemented by using a sliding window. The sliding window is the set of consecutive sequence numbers that the sender can use when transmitting segments without being forced to wait for an acknowledgement. The figure below shows a sliding window containing five segments (6,7,8,9 and 10). Two of these sequence numbers (6 and 7) have been used to send segments and only three sequence numbers (8, 9 and 10) remain in the sliding window. The sliding window is said to be closed once all sequence numbers contained in the sliding window have been used.

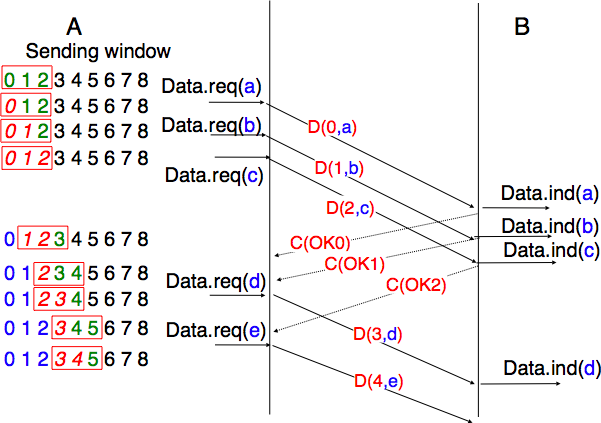

The figure below illustrates the operation of the sliding window. The sliding window shown contains three segments. The sender can thus transmit three segments before being forced to wait for an acknowledgement. The sliding window moves to the higher sequence numbers upon reception of acknowledgements. When the first acknowledgement (OK0) is received, it allows the sender to move its sliding window to the right and sequence number 3 becomes available. This sequence number is used later to transmit SDU d.

In practice, as the segment header encodes the sequence number in an n bits string, only the sequence numbers between $0$ and $2^{n}-1$ can be used. This implies that the same sequence number is used for different segments and that the sliding window will wrap. This is illustrated in the figure below assuming that 2 bits are used to encode the sequence number in the segment header. Note that upon reception of OK1, the sender slides its window and can use sequence number 0 again.
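The wrapping of the sliding window can be sketched with modular arithmetic. The class below is an illustrative sender-side bookkeeping structure, not a complete protocol: it assumes cumulative acknowledgements that are never lost or reordered, and the names `SlidingWindow`, `next_seq` and `ack` are hypothetical.

```python
class SlidingWindow:
    def __init__(self, n_bits, size):
        self.maxseq = 2 ** n_bits   # number of distinct sequence numbers
        self.size = size            # W, the window size in segments
        self.base = 0               # oldest unacknowledged sequence number
        self.in_flight = 0          # sequence numbers used but not yet acknowledged

    def can_send(self):
        """False when the window is closed."""
        return self.in_flight < self.size

    def next_seq(self):
        """Return the next usable sequence number, wrapping modulo maxseq."""
        if not self.can_send():
            raise RuntimeError("sliding window is closed")
        seq = (self.base + self.in_flight) % self.maxseq
        self.in_flight += 1
        return seq

    def ack(self, seq):
        """Slide the window past seq upon reception of OK<seq>."""
        acked = (seq - self.base) % self.maxseq + 1
        if acked <= self.in_flight:          # ignore acknowledgements outside the window
            self.base = (seq + 1) % self.maxseq
            self.in_flight -= acked


w = SlidingWindow(n_bits=2, size=3)
print([w.next_seq() for _ in range(3)])   # [0, 1, 2], the window is now closed
w.ack(0)
print(w.next_seq())                       # 3
w.ack(1)
print(w.next_seq())                       # 0, the sequence number is reused
```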

Unfortunately, segment losses do not disappear because a transport protocol is using a sliding window. To recover from segment losses, a sliding window protocol must define :

- a heuristic to detect segment losses

- a retransmission strategy to retransmit the lost segments.

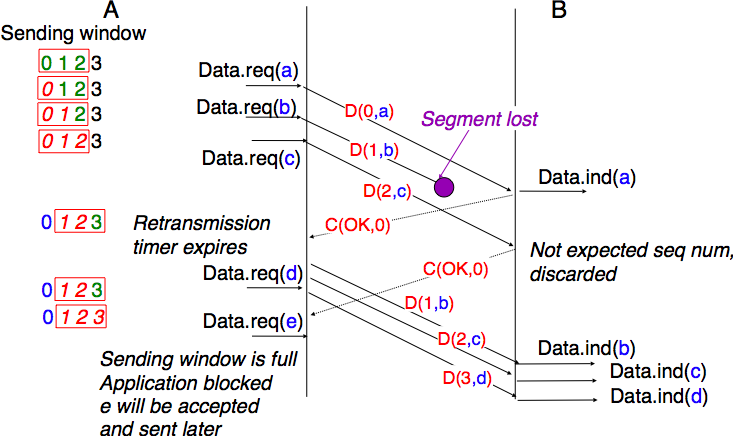

The simplest sliding window protocol uses go-back-n recovery. Intuitively, go-back-n operates as follows. A go-back-n receiver is as simple as possible. It only accepts the segments that arrive in-sequence. A go-back-n receiver discards any out-of-sequence segment that it receives. When go-back-n receives a data segment, it always returns an acknowledgement containing the sequence number of the last in-sequence segment that it has received. This acknowledgement is said to be cumulative. When a go-back-n receiver sends an acknowledgement for sequence number x, it implicitly acknowledges the reception of all segments whose sequence number is earlier than x. A key advantage of these cumulative acknowledgements is that it is easy to recover from the loss of an acknowledgement. Consider for example a go-back-n receiver that received segments 1, 2 and 3. It sent OK1, OK2 and OK3. Unfortunately, OK1 and OK2 were lost. Thanks to the cumulative acknowledgements, when the sender receives OK3, it knows that all three segments have been correctly received.

The figure below shows the FSM of a simple go-back-n receiver. This receiver uses two variables : lastack and next. next is the next expected sequence number and lastack the sequence number of the last data segment that has been acknowledged. The receiver only accepts the segments that are received in sequence. maxseq is the number of different sequence numbers ($2^n$).

A go-back-n sender is also very simple. It uses a sending buffer that can store an entire sliding window of segments [3] . The segments are sent with increasing sequence number (modulo maxseq). The sender must wait for an acknowledgement once its sending buffer is full. When a go-back-n sender receives an acknowledgement, it removes from the sending buffer all the acknowledged segments and uses a retransmission timer to detect segment losses. A simple go-back-n sender maintains one retransmission timer per connection. This timer is started when the first segment is sent. When the go-back-n sender receives an acknowledgement, it restarts the retransmission timer only if there are still unacknowledged segments in its sending buffer. When the retransmission timer expires, the go-back-n sender assumes that all the unacknowledged segments currently stored in its sending buffer have been lost. It thus retransmits all the unacknowledged segments in the buffer and restarts its retransmission timer.
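The interaction between a go-back-n sender and receiver can be sketched as a round-based simulation. Several simplifications are assumed here and are not part of the description above: in each round the sender transmits its whole sending buffer (first transmissions and, after a timer expiry, retransmissions), acknowledgements are never lost, the data transmissions whose index appears in `lost` are dropped by a hypothetical channel, and `lastack` initially holds `maxseq - 1`. The window must not exceed `maxseq - 1`.

```python
from collections import deque


class GbnReceiver:
    def __init__(self, maxseq):
        self.maxseq = maxseq
        self.next = 0                 # next expected sequence number
        self.lastack = maxseq - 1     # assumed initial value, nothing received yet
        self.delivered = []

    def recvd(self, seq, sdu):
        if seq == self.next:          # in-sequence segment: deliver and advance
            self.delivered.append(sdu)
            self.lastack = seq
            self.next = (seq + 1) % self.maxseq
        return self.lastack           # cumulative acknowledgement


def gbn_transfer(sdus, window, maxseq, lost=()):
    receiver = GbnReceiver(maxseq)
    pending = deque(sdus)
    buffer = deque()                  # unacknowledged (seq, sdu) pairs, oldest first
    next_seq = 0
    transmissions = 0
    while pending or buffer:
        while pending and len(buffer) < window:      # fill the sliding window
            buffer.append((next_seq, pending.popleft()))
            next_seq = (next_seq + 1) % maxseq
        ack = None
        for seq, sdu in buffer:                      # (re)transmit the buffer
            if transmissions not in lost:
                ack = receiver.recvd(seq, sdu)
            transmissions += 1
        seqs = [s for s, _ in buffer]
        if ack in seqs:                              # remove all acknowledged segments
            for _ in range(seqs.index(ack) + 1):
                buffer.popleft()
    return receiver.delivered, transmissions


print(gbn_transfer(list("abcde"), window=3, maxseq=4, lost={1}))
# (['a', 'b', 'c', 'd', 'e'], 7)
```

In this run the segment carrying `b` is lost. The segment carrying `c` arrives out of sequence and is discarded, so both `b` and `c` are retransmitted, which explains the seven transmissions for five SDUs.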

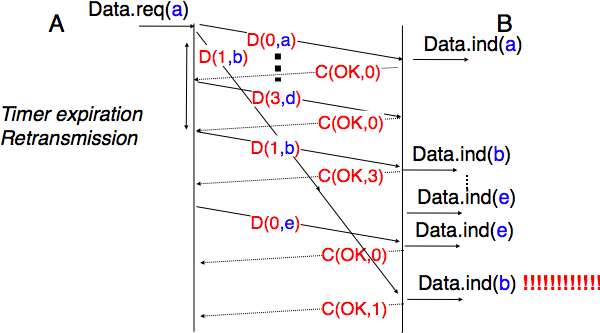

The operation of go-back-n is illustrated in the figure below. In this figure, note that upon reception of the out-of-sequence segment D(2,c), the receiver returns a cumulative acknowledgement C(OK,0) that acknowledges all the segments that have been received in sequence. The lost segment is retransmitted upon the expiration of the retransmission timer.

The main advantage of go-back-n is that it can be easily implemented, and it can also provide good performance when only a few segments are lost. However, when there are many losses, the performance of go-back-n quickly drops for two reasons :

- the go-back-n receiver does not accept out-of-sequence segments

- the go-back-n sender retransmits all unacknowledged segments once it has detected a loss

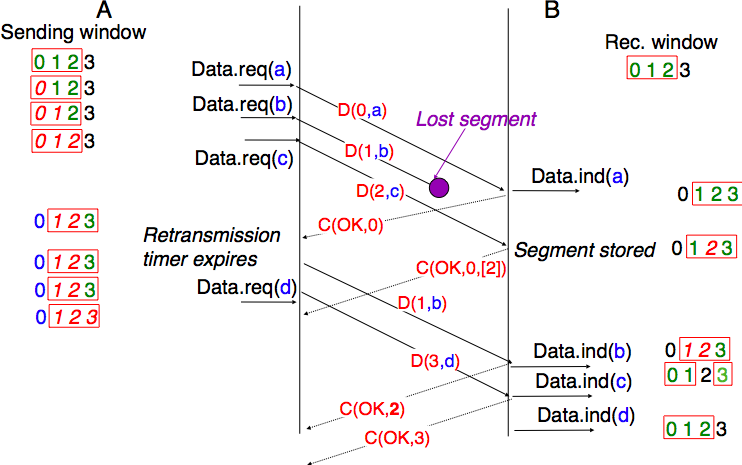

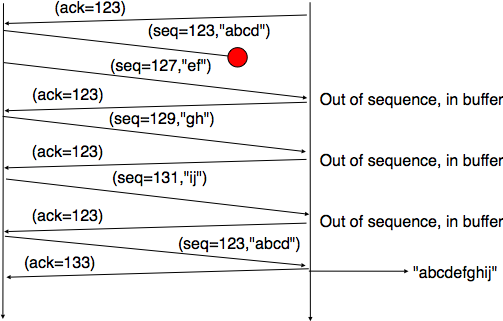

Selective repeat is a better strategy to recover from segment losses. Intuitively, selective repeat allows the receiver to accept out-of-sequence segments. Furthermore, when a selective repeat sender detects losses, it only retransmits the segments that have been lost and not the segments that have already been correctly received.

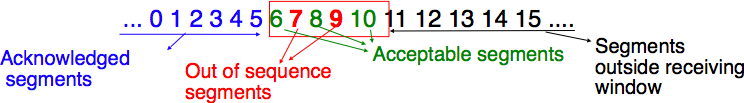

A selective repeat receiver maintains a sliding window of W segments and stores in a buffer the out-of-sequence segments that it receives. The figure below shows a five segment receive window on a receiver that has already received segments 7 and 9.

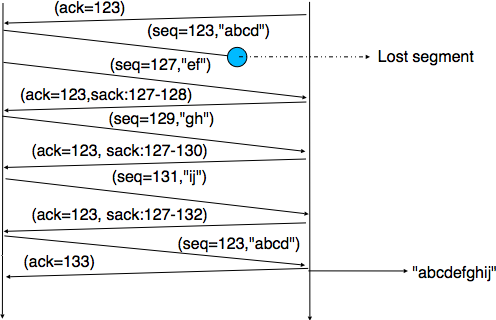

A selective repeat receiver discards all segments having an invalid CRC, and maintains the variable lastack as the sequence number of the last in-sequence segment that it has received. The receiver always includes the value of lastack in the acknowledgements that it sends. Some protocols also allow the selective repeat receiver to acknowledge the out-of-sequence segments that it has received. This can be done for example by placing the list of the sequence numbers of the correctly received, but out-of-sequence segments in the acknowledgements together with the lastack value.

When a selective repeat receiver receives a data segment, it first verifies whether the segment is inside its receiving window. If yes, the segment is placed in the receive buffer. If not, the received segment is discarded and an acknowledgement containing lastack is sent to the sender. The receiver then removes all consecutive segments starting at lastack (if any) from the receive buffer. The payloads of these segments are delivered to the user, lastack and the receiving window are updated, and an acknowledgement acknowledging the last segment received in sequence is sent.
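The receiver behaviour described above can be sketched as follows. This is an illustration under assumptions: segments with an invalid CRC are supposed to have been discarded before `recvd` is called, `lastack` initially holds `maxseq - 1`, and the acknowledgement is returned as a pair made of `lastack` and the numerically sorted list of buffered out-of-sequence sequence numbers. The class name `SelectiveRepeatReceiver` is hypothetical.

```python
class SelectiveRepeatReceiver:
    def __init__(self, maxseq, window):
        self.maxseq = maxseq
        self.window = window          # W, the size of the receiving window
        self.lastack = maxseq - 1     # assumed initial value, nothing received yet
        self.buffer = {}              # out-of-sequence segments: seq -> sdu
        self.delivered = []           # payloads delivered to the user

    def in_window(self, seq):
        first = (self.lastack + 1) % self.maxseq
        return (seq - first) % self.maxseq < self.window

    def recvd(self, seq, sdu):
        if self.in_window(seq):
            self.buffer[seq] = sdu
            nxt = (self.lastack + 1) % self.maxseq
            while nxt in self.buffer:             # deliver consecutive segments
                self.delivered.append(self.buffer.pop(nxt))
                self.lastack = nxt
                nxt = (nxt + 1) % self.maxseq
        # Segments outside the window are discarded, but still acknowledged.
        return self.lastack, sorted(self.buffer)


r = SelectiveRepeatReceiver(maxseq=8, window=4)
print(r.recvd(0, "a"))   # (0, [])
print(r.recvd(2, "c"))   # (0, [2]): out of sequence, stored in the buffer
print(r.recvd(1, "b"))   # (2, []): the gap is filled, b and c are delivered
print(r.delivered)       # ['a', 'b', 'c']
```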

The selective repeat sender maintains a sending buffer that can store up to W unacknowledged segments. These segments are sent as long as the sending buffer is not full. Several implementations of a selective repeat sender are possible. A simple implementation is to associate a retransmission timer to each segment. The timer is started when the segment is sent and cancelled upon reception of an acknowledgement that covers this segment. When a retransmission timer expires, the corresponding segment is retransmitted and this retransmission timer is restarted. When an acknowledgement is received, all the segments that are covered by this acknowledgement are removed from the sending buffer and the sliding window is updated.

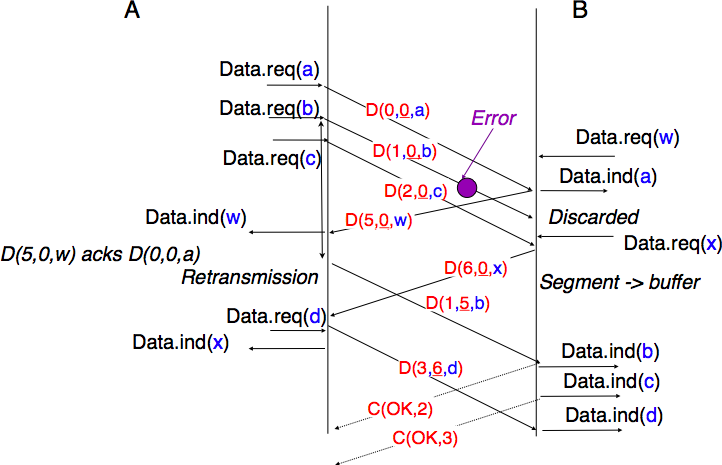

The figure below illustrates the operation of selective repeat when segments are lost. In this figure, C(OK,x) is used to indicate that all segments, up to and including sequence number x have been received correctly.

Pure cumulative acknowledgements work well with the go-back-n strategy. However, with only cumulative acknowledgements a selective repeat sender cannot easily determine which data segments have been correctly received after a data segment has been lost. For example, in the figure above, the second C(OK,0) does not inform explicitly the sender of the reception of D(2,c) and the sender could retransmit this segment although it has already been received. A possible solution to improve the performance of selective repeat is to provide additional information about the received segments in the acknowledgements that are returned by the receiver. For example, the receiver could add in the returned acknowledgement the list of the sequence numbers of all segments that have already been received. Such acknowledgements are sometimes called selective acknowledgements. This is illustrated in the figure below.

In the figure above, when the sender receives C(OK,0,[2]), it knows that all segments up to and including D(0,...) have been correctly received. It also knows that segment D(2,...) has been received and can cancel the retransmission timer associated to this segment. However, this segment should not be removed from the sending buffer before the reception of a cumulative acknowledgement (C(OK,2) in the figure above) that covers this segment.

Note

Maximum window size with go-back-n and selective repeat

A transport protocol that uses n bits to encode its sequence number can send up to $2^n$ different segments. However, to ensure a reliable delivery of the segments, go-back-n and selective repeat cannot use a sending window of $2^n$ segments.

Consider first go-back-n and assume that a sender sends $2^n$ segments. These segments are received in-sequence by the destination, but all the returned acknowledgements are lost. The sender will retransmit all segments and they will all be accepted by the receiver and delivered a second time to the user. It is easy to see that this problem can be avoided if the maximum size of the sending window is $2^n-1$ segments.

A similar problem occurs with selective repeat. However, as the receiver accepts out-of-sequence segments, a sending window of $2^n-1$ segments is not sufficient to ensure a reliable delivery of all segments. It can be easily shown that to avoid this problem, a selective repeat sender cannot use a window that is larger than $\frac{2^n}{2}$ segments.
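The go-back-n case can be reproduced with a few lines of code. The sketch below only models the receiver side of the scenario: a full window is sent, every acknowledgement is lost, and the sender retransmits the same window. The function name `deliveries_after_lost_acks` is hypothetical.

```python
def deliveries_after_lost_acks(n_bits, window):
    """Return the (attempt, seq) pairs that a go-back-n receiver delivers
    to its user when a full window is sent twice."""
    maxseq = 2 ** n_bits
    expected = 0                                  # next expected sequence number
    delivered = []
    window_seqs = [i % maxseq for i in range(window)]
    for attempt in ("first", "retransmission"):
        for seq in window_seqs:
            if seq == expected:                   # accepted as an in-sequence segment
                delivered.append((attempt, seq))
                expected = (expected + 1) % maxseq
    return delivered


# n = 2 bits: a window of 4 segments delivers every segment twice,
# while a window of 3 segments does not.
print(len(deliveries_after_lost_acks(2, 4)))   # 8
print(len(deliveries_after_lost_acks(2, 3)))   # 3
```

With a window of four segments, the receiver expects sequence number 0 again after the first window, so it cannot distinguish the retransmission of the first segment from a new segment.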

Go-back-n or selective repeat are used by transport protocols to provide a reliable data transfer above an unreliable network layer service. Until now, we have assumed that the size of the sliding window was fixed for the entire lifetime of the connection. In practice a transport layer entity is usually implemented in the operating system and shares memory with other parts of the system. Furthermore, a transport layer entity must support several (possibly hundreds or thousands of) transport connections at the same time. This implies that the memory which can be used to support the sending or the receiving buffer of a transport connection may change during the lifetime of the connection [4] . Thus, a transport protocol must allow the sender and the receiver to adjust their window sizes.

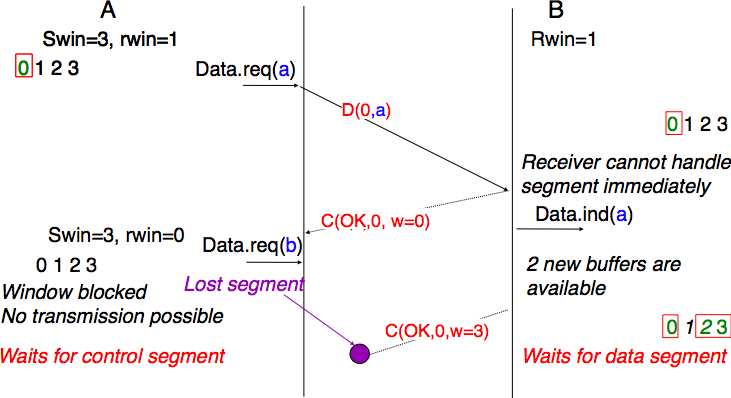

To deal with this issue, transport protocols allow the receiver to advertise the current size of its receiving window in all the acknowledgements that it sends. The receiving window advertised by the receiver bounds the size of the sending buffer used by the sender. In practice, the sender maintains two state variables : swin, the size of its sending window (that may be adjusted by the system) and rwin, the size of the receiving window advertised by the receiver. At any time, the number of unacknowledged segments cannot be larger than min(swin,rwin) [5] . The utilisation of dynamic windows is illustrated in the figure below.
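The rule that bounds the number of unacknowledged segments by min(swin,rwin) can be sketched as follows. The class `WindowedSender` and its methods are hypothetical, and the sketch assumes that rwin starts with the same value as swin until the first acknowledgement arrives.

```python
class WindowedSender:
    def __init__(self, swin):
        self.swin = swin      # sending window, may be adjusted by the system
        self.rwin = swin      # assumed initial value of the advertised window
        self.unacked = 0      # segments sent but not yet acknowledged

    def usable(self):
        """Number of segments that can still be sent right now."""
        return max(0, min(self.swin, self.rwin) - self.unacked)

    def send(self):
        """Send one segment if the window allows it; return True on success."""
        if self.usable() == 0:
            return False
        self.unacked += 1
        return True

    def on_ack(self, acked, rwin):
        """Process an acknowledgement covering `acked` segments and
        advertising a receive window of `rwin` segments."""
        self.unacked -= acked
        self.rwin = rwin


s = WindowedSender(swin=4)
s.on_ack(acked=0, rwin=2)                 # the receiver advertises 2 segments
print(s.send(), s.send(), s.send())       # True True False
s.on_ack(acked=2, rwin=0)                 # the receive buffer is full
print(s.usable())                         # 0: the sender is blocked
```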

The receiver may adjust its advertised receive window based on its current memory consumption, but also to limit the bandwidth used by the sender. In practice, the receive buffer can also shrink as the application may not be able to process the received data quickly enough. In this case, the receive buffer may be completely full and the advertised receive window may shrink to 0. When the sender receives an acknowledgement with a receive window set to 0, it is blocked until it receives an acknowledgement with a positive receive window. Unfortunately, as shown in the figure below, the loss of this acknowledgement could cause a deadlock as the sender waits for an acknowledgement while the receiver is waiting for a data segment.

To solve this problem, transport protocols rely on a special timer : the persistence timer. This timer is started by the sender whenever it receives an acknowledgement advertising a receive window set to 0. When the timer expires, the sender retransmits an old segment in order to force the receiver to send a new acknowledgement, and hence send the current receive window size.

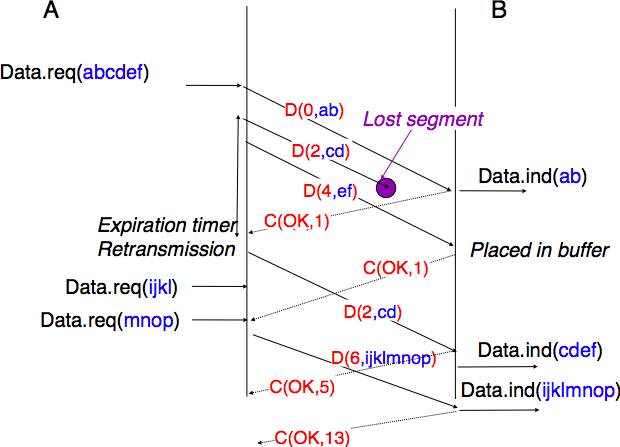

To conclude our description of the basic mechanisms found in transport protocols, we still need to discuss the impact of segments arriving in the wrong order. If two consecutive segments are reordered, the receiver relies on their sequence numbers to reorder them in its receive buffer. Unfortunately, as transport protocols reuse the same sequence number for different segments, if a segment is delayed for a prolonged period of time, it might still be accepted by the receiver. This is illustrated in the figure below where segment D(1,b) is delayed.

To deal with this problem, transport protocols combine two solutions. First, they use 32 bits or more to encode the sequence number in the segment header. This increases the overhead, but also increases the delay between the transmission of two different segments having the same sequence number. Second, transport protocols require the network layer to enforce a Maximum Segment Lifetime (MSL). The network layer must ensure that no packet remains in the network for more than MSL seconds. In the Internet the MSL is assumed [6] to be 2 minutes RFC 793. Note that this limits the maximum bandwidth of a transport protocol. If it uses n bits to encode its sequence numbers, then it cannot send more than $2^n$ segments every MSL seconds.
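The bound on the sending rate can be evaluated numerically. The short sketch below assumes 32-bit sequence numbers and an MSL of 2 minutes; the function name `max_segments_per_second` is chosen for this example only.

```python
def max_segments_per_second(n_bits, msl_seconds):
    """Upper bound on the sending rate when sequence numbers use n_bits."""
    return 2 ** n_bits / msl_seconds


# Example: 32-bit sequence numbers and an MSL of 120 seconds.
rate = max_segments_per_second(32, 120)
print(f"{rate:,.0f} sequence numbers per second")

# If each sequence number identifies one byte, as in a byte stream protocol,
# the same bound can be expressed as a bit rate.
print(f"about {rate * 8 / 1e6:.0f} Mbps")
```

With these assumptions, a protocol that numbers individual bytes is limited to roughly 286 Mbps, which shows why this bound matters on fast networks.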

Transport protocols often need to send data in both directions. To reduce the overhead caused by the acknowledgements, most transport protocols use piggybacking. Thanks to this technique, a transport entity can place inside the header of the data segments that it sends, the acknowledgements and the receive window that it advertises for the opposite direction of the data flow. The main advantage of piggybacking is that it reduces the overhead as it is not necessary to send a complete segment to carry an acknowledgement. This is illustrated in the figure below where the acknowledgement number is underlined in the data segments. Piggybacking is only used when data flows in both directions. A receiver will generate a pure acknowledgement when it does not send data in the opposite direction as shown in the bottom of the figure.

The last point to be discussed about the data transfer mechanisms used by transport protocols is the provision of a byte stream service. As indicated in the first chapter, the byte stream service is widely used in the transport layer. The transport protocols that provide a byte stream service associate a sequence number to all the bytes that are sent and place the sequence number of the first byte of the segment in the segment’s header. This is illustrated in the figure below. In this example, the sender chooses to put two bytes in each of the first three segments. This is for graphical reasons; a real transport protocol would use larger segments in practice. However, the division of the byte stream into segments, combined with the losses and retransmissions, explains why the byte stream service does not preserve the SDU boundaries.
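The numbering of the bytes can be sketched as follows. This is a simplified illustration: the function names are hypothetical, sequence numbers are not wrapped, and the receiver simply stops at the first gap instead of buffering out-of-order data.

```python
def segment_stream(data, first_seq, payload_size):
    """Split `data` into (sequence number of first byte, payload) pairs."""
    return [
        (first_seq + offset, data[offset:offset + payload_size])
        for offset in range(0, len(data), payload_size)
    ]


def reassemble(segments, first_seq):
    """Rebuild the byte stream from segments received in any order.

    Duplicates are ignored and reassembly stops at the first missing byte.
    """
    stream = bytearray()
    expected = first_seq
    for seq, payload in sorted(set(segments)):
        if seq == expected:
            stream += payload
            expected += len(payload)
    return bytes(stream)


segments = segment_stream(b"abcdefg", first_seq=0, payload_size=2)
print(segments)  # two bytes per segment, as in the example of the text
print(reassemble(list(reversed(segments)) + segments[:1], first_seq=0))
```

The receiver only recovers a sequence of bytes. Nothing in the segments indicates where the sender's SDUs started or ended, which is why the SDU boundaries are lost.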

Connection establishment and release¶

The last points to be discussed about the transport protocol are the mechanisms used to establish and release a transport connection.

We explained in the first chapters the service primitives used to establish a connection. The simplest approach to establish a transport connection would be to define two special control segments : CR and CA. The CR segment is sent by the transport entity that wishes to initiate a connection. If the remote entity wishes to accept the connection, it replies by sending a CA segment. The transport connection is considered to be established once the CA segment has been received and data segments can be sent in both directions.

Unfortunately, this scheme is not sufficient for several reasons. First, a transport entity usually needs to maintain several transport connections with remote entities. Sometimes, different users (i.e. processes) running above a given transport entity request the establishment of several transport connections to different users attached to the same remote transport entity. These different transport connections must be clearly separated to ensure that data from one connection is not passed to the other connections. This can be achieved by using a connection identifier, chosen by the transport entities and placed inside each segment to allow the entity which receives a segment to easily associate it to one established connection.

Second, as the network layer is imperfect, the CR or CA segment can be lost, delayed, or suffer from transmission errors. To deal with these problems, the control segments must be protected by using a CRC or checksum to detect transmission errors. Furthermore, since the CA segment acknowledges the reception of the CR segment, the CR segment can be protected by using a retransmission timer.

Unfortunately, this scheme is not sufficient to ensure the reliability of the transport service. Consider for example a short-lived transport connection where a single, but important transfer (e.g. money transfer from a bank account) is sent. Such a short-lived connection starts with a CR segment acknowledged by a CA segment, then the data segment is sent, acknowledged and the connection terminates. Unfortunately, as the network layer service is unreliable, delays combined with retransmissions may lead to the situation depicted in the figure below, where a delayed CR and data segments from a former connection are accepted by the receiving entity as valid segments, and the corresponding data is delivered to the user. Duplicating SDUs is not acceptable, and the transport protocol must solve this problem.

To avoid these duplicates, transport protocols require the network layer to bound the Maximum Segment Lifetime (MSL). The organisation of the network must guarantee that no segment remains in the network for longer than MSL seconds. On today’s Internet, MSL is expected to be 2 minutes. To avoid duplicate transport connections, transport protocol entities must be able to safely distinguish between a duplicate CR segment and a new CR segment, without forcing each transport entity to remember all the transport connections that it has established in the past.

A classical solution to avoid remembering the previous transport connections to detect duplicates is to use a clock inside each transport entity. This transport clock has the following characteristics :

- the transport clock is implemented as a k bits counter and its clock cycle is such that $2^k \times cycle \gg MSL$. Furthermore, the transport clock counter is incremented every clock cycle and after each connection establishment. This clock is illustrated in the figure below.

- the transport clock must continue to be incremented even if the transport entity stops or reboots

It should be noted that transport clocks do not need to be, and usually are not, synchronised to the real-time clock. Precisely synchronising real-time clocks is an interesting problem, but it is outside the scope of this document. See [Mills2006] for a detailed discussion on synchronising the real-time clock.

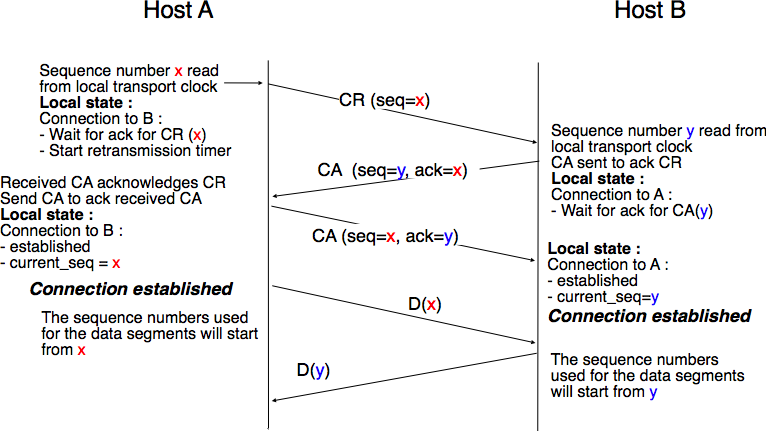

The transport clock is combined with an exchange of three segments, called the three way handshake, to detect duplicates. This three way handshake occurs as follows :

- The initiating transport entity sends a CR segment. This segment requests the establishment of a transport connection. It contains a connection identifier (not shown in the figure) and a sequence number (seq=x in the figure below) whose value is extracted from the transport clock. The transmission of the CR segment is protected by a retransmission timer.

- The remote transport entity processes the CR segment and creates state for the connection attempt. At this stage, the remote entity does not yet know whether this is a new connection attempt or a duplicate segment. It returns a CA segment that contains an acknowledgement number to confirm the reception of the CR segment (ack=x in the figure below) and a sequence number (seq=y in the figure below) whose value is extracted from its transport clock. At this stage, the connection is not yet established.

- The initiating entity receives the CA segment. The acknowledgement number of this segment confirms that the remote entity has correctly received the CR segment. The transport connection is considered to be established by the initiating entity and the numbering of the data segments starts at sequence number x. Before sending data segments, the initiating entity must acknowledge the received CA segment by sending another CA segment.

- The remote entity considers the transport connection to be established after having received the segment that acknowledges its CA segment. The numbering of the data segments sent by the remote entity starts at sequence number y.

The three way handshake is illustrated in the figure below.

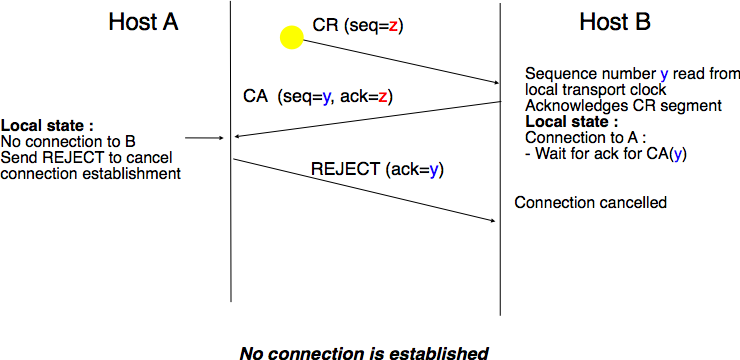

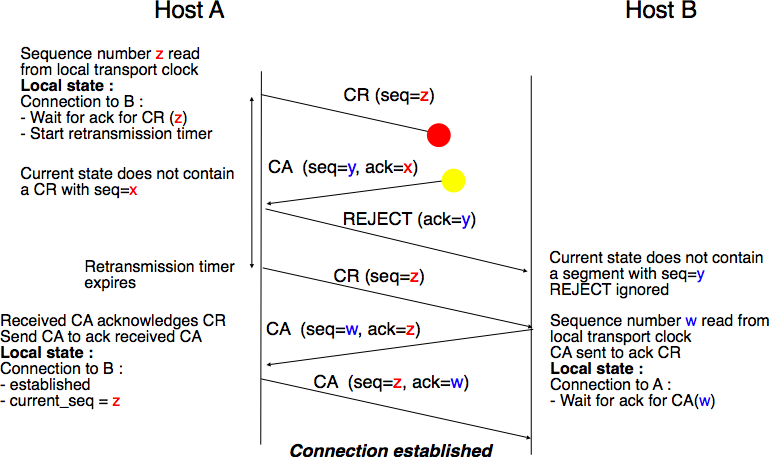

Thanks to the three way handshake, transport entities avoid duplicate transport connections. This is illustrated by the three scenarios below.

The first scenario is when the remote entity receives an old CR segment. It considers this CR segment as a connection establishment attempt and replies by sending a CA segment. However, the initiating host cannot match the received CA segment with a previous connection attempt. It sends a control segment (REJECT in the figure below) to cancel the spurious connection attempt. The remote entity cancels the connection attempt upon reception of this control segment.

A second scenario is when the initiating entity sends a CR segment that does not reach the remote entity and receives a duplicate CA segment from a previous connection attempt. This duplicate CA segment cannot contain a valid acknowledgement for the CR segment as the sequence number of the CR segment was extracted from the transport clock of the initiating entity. The CA segment is thus rejected and the CR segment is retransmitted upon expiration of the retransmission timer.

The last scenario is less likely, but it is important to consider it as well. The remote entity receives an old CR segment. It notes the connection attempt and acknowledges it by sending a CA segment. The initiating entity does not have a matching connection attempt and replies by sending a REJECT. Unfortunately, this segment never reaches the remote entity. Instead, the remote entity receives a retransmission of an older CA segment that contains the same sequence number as the first CR segment. This CA segment cannot be accepted by the remote entity as a confirmation of the transport connection as its acknowledgement number cannot have the same value as the sequence number of the first CA segment.

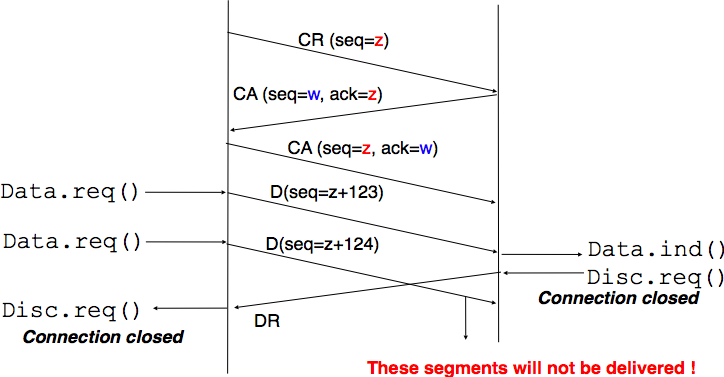
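The three way handshake and the handling of an old CR segment can be sketched as follows. This is a toy model under several assumptions: segments are represented as Python dictionaries, the transport clock is a simple 32-bit counter that only advances at connection establishment, and the names `Entity`, `connect` and `receive` are chosen for the example. Retransmission timers and connection identifiers are omitted.

```python
class Entity:
    """Toy transport entity implementing the three way handshake."""

    def __init__(self, clock_start):
        self.clock = clock_start   # stands in for the transport clock
        self.pending_cr = None     # sequence number of our outstanding CR
        self.half_open = {}        # our sequence number y -> peer's x
        self.established = set()   # (local seq, remote seq) pairs

    def _tick(self):
        self.clock = (self.clock + 1) % 2 ** 32
        return self.clock

    def connect(self):
        """Start a connection attempt by building a CR segment."""
        self.pending_cr = self._tick()
        return {"type": "CR", "seq": self.pending_cr}

    def receive(self, seg):
        """Process one segment and return the reply, or None."""
        if seg["type"] == "CR":
            # New attempt or duplicate? Unknown at this stage: keep state.
            y = self._tick()
            self.half_open[y] = seg["seq"]
            return {"type": "CA", "seq": y, "ack": seg["seq"]}
        if seg["type"] == "CA":
            if seg["ack"] == self.pending_cr:
                # The CA acknowledges our CR: established on our side.
                self.established.add((self.pending_cr, seg["seq"]))
                self.pending_cr = None
                return {"type": "CA", "seq": seg["ack"], "ack": seg["seq"]}
            if self.half_open.get(seg["ack"]) == seg["seq"]:
                # Third segment: it acknowledges the CA that we sent.
                del self.half_open[seg["ack"]]
                self.established.add((seg["ack"], seg["seq"]))
                return None
            # No matching attempt: cancel the spurious connection.
            return {"type": "REJECT", "ack": seg["seq"]}
        if seg["type"] == "REJECT":
            self.half_open.pop(seg["ack"], None)
        return None


a, b = Entity(clock_start=100), Entity(clock_start=500)
third = a.receive(b.receive(a.connect()))
b.receive(third)
print(a.established, b.established)

# First scenario of the text: b receives an old CR that a does not remember.
reply = a.receive(b.receive({"type": "CR", "seq": 7}))
print(reply["type"])
```

In this sketch, a CA segment whose acknowledgement number matches neither an outstanding CR nor a half-open connection is always answered with a REJECT, which also covers the second and third scenarios.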

When we discussed the connection-oriented service, we mentioned that there are two types of connection releases : abrupt release and graceful release.

The first solution to release a transport connection is to define a new control segment (e.g. the DR segment) and consider the connection to be released once this segment has been sent or received. This is illustrated in the figure below.

As the entity that sends the DR segment cannot know whether the other entity has already sent all its data on the connection, SDUs can be lost during such an abrupt connection release.

The second method to release a transport connection is to release independently the two directions of data transfer. Once a user of the transport service has sent all its SDUs, it performs a DISCONNECT.req for its direction of data transfer. The transport entity sends a control segment to request the release of the connection after the delivery of all previous SDUs to the remote user. This is usually done by placing in the DR the next sequence number and by delivering the DISCONNECT.ind only after all previous DATA.ind. The remote entity confirms the reception of the DR segment and the release of the corresponding direction of data transfer by returning an acknowledgement. This is illustrated in the figure below.

Footnotes

| [2] | In the application layer, most servers are implemented as processes. The network and transport layer on the other hand are usually implemented inside the operating system and the amount of memory that they can use is limited by the amount of memory allocated to the entire kernel. |

| [3] | The size of the sliding window can be either fixed for a given protocol or negotiated during the connection establishment phase. We’ll see later that it is also possible to change the size of the sliding window during the connection’s lifetime. |

| [4] | For a discussion on how the sending buffer can change, see e.g. [SMM1998] |

| [5] | Note that if the receive window shrinks, it might happen that the sender has already sent a segment that is no longer inside its window. This segment will be discarded by the receiver and the sender will retransmit it later. |

| [6] | As we will see in the next chapter, the Internet does not strictly enforce this MSL. However, it is reasonable to expect that most packets on the Internet will not remain in the network for more than 2 minutes. There are a few exceptions to this rule, such as RFC 1149 whose implementation is described in http://www.blug.linux.no/rfc1149/ but there are few real links supporting RFC 1149 in the Internet. |

The User Datagram Protocol¶

The User Datagram Protocol (UDP) is defined in RFC 768. It provides an unreliable connectionless transport service on top of the unreliable network layer connectionless service. The main characteristics of the UDP service are :

- the UDP service cannot deliver SDUs that are larger than 65507 bytes [7]

- the UDP service does not guarantee the delivery of SDUs (losses and desequencing can occur)

- the UDP service will not deliver a corrupted SDU to the destination

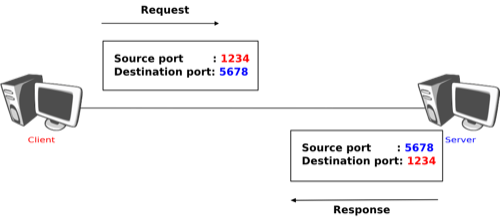

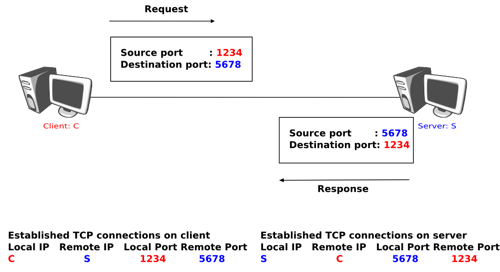

Compared to the connectionless network layer service, the main advantage of the UDP service is that it allows several applications running on a host to exchange SDUs with several other applications running on remote hosts. Let us consider two hosts, e.g. a client and a server. The network layer service allows the client to send information to the server, but if an application running on the client wants to contact a particular application running on the server, then an additional addressing mechanism is required other than the IP address that identifies a host, in order to differentiate the application running on a host. This additional addressing is provided by port numbers. When a server using UDP is enabled on a host, this server registers a port number. This port number will be used by the clients to contact the server process via UDP.

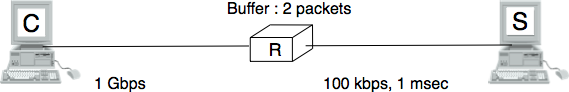

The figure below shows a typical usage of the UDP port numbers. The client process uses port number 1234 while the server process uses port number 5678. When the client sends a request, it is identified as originating from port number 1234 on the client host and destined to port number 5678 on the server host. When the server process replies to this request, the server’s UDP implementation will send the reply as originating from port 5678 on the server host and destined to port 1234 on the client host.

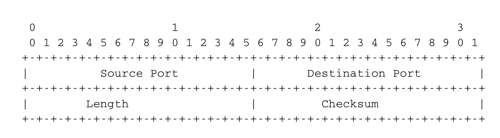

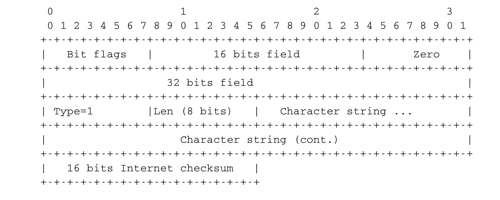

UDP uses a single segment format shown in the figure below.

The UDP header contains four fields :

- a 16 bits source port

- a 16 bits destination port

- a 16 bits length field

- a 16 bits checksum
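The four fields listed above can be encoded and decoded with Python's `struct` module. The sketch below is a minimal illustration; the helper names are chosen for this example. The length field counts the eight bytes of the header plus the payload, and the checksum is left at zero here, which indicates that no checksum was computed.

```python
import struct

# Four 16-bit unsigned fields in network byte order.
UDP_HEADER = struct.Struct("!HHHH")


def build_udp_segment(src_port, dst_port, payload, checksum=0):
    """Return the bytes of a UDP segment (header followed by payload)."""
    length = UDP_HEADER.size + len(payload)
    return UDP_HEADER.pack(src_port, dst_port, length, checksum) + payload


def parse_udp_segment(segment):
    """Decode the header fields and extract the payload."""
    src_port, dst_port, length, checksum = UDP_HEADER.unpack_from(segment)
    return {
        "source_port": src_port,
        "destination_port": dst_port,
        "length": length,
        "checksum": checksum,
        "payload": segment[UDP_HEADER.size:length],
    }


segment = build_udp_segment(1234, 5678, b"hi")
print(segment.hex())
print(parse_udp_segment(segment))
```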

As the port numbers are encoded as a 16 bits field, there can be up to only 65535 different server processes that are bound to a different UDP port at the same time on a given server. In practice, this limit is never reached. However, it is worth noticing that most implementations divide the range of allowed UDP port numbers into three different ranges :

- the privileged port numbers (0 < port < 1024)

- the ephemeral port numbers ( officially [9] 49152 <= port <= 65535 )

- the registered port numbers (officially 1024 <= port < 49152)

In most Unix variants, only processes having system administrator privileges can be bound to port numbers smaller than 1024. Well-known servers such as DNS, NTP or RPC use privileged port numbers. When a client needs to use UDP, it usually does not require a specific port number. In this case, the UDP implementation will allocate the first available port number in the ephemeral range. The range of registered port numbers should be used by servers. In theory, developers of network servers should register their port number officially through IANA, but few developers do this.
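The three ranges can be expressed as a small helper. The function name `classify_port` is hypothetical and the boundaries are the official ones quoted above. Port 0 is grouped with the privileged range here for simplicity.

```python
def classify_port(port):
    """Return the name of the range that a 16-bit port number belongs to."""
    if not 0 <= port <= 65535:
        raise ValueError("a port number must fit in 16 bits")
    if port < 1024:
        return "privileged"
    if port < 49152:
        return "registered"
    return "ephemeral"


for port in (53, 5678, 49152):
    print(port, classify_port(port))
```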

Note

Computation of the UDP checksum

The checksum of the UDP segment is computed over :

- a pseudo header containing the source IP address, the destination IP address and a 32 bits bit field containing the most significant byte set to 0, the second set to 17 and the length of the UDP segment in the lower two bytes

- the entire UDP segment, including its header

This pseudo-header allows the receiver to detect errors affecting the IP source or destination addresses placed in the IP layer below. This is a violation of the layering principle that dates from the time when UDP and IP were elements of a single protocol. It should be noted that if the checksum algorithm computes the value ‘0x0000’, then the value ‘0xffff’ is transmitted. A UDP segment whose checksum is set to ‘0x0000’ is a segment for which the transmitter did not compute a checksum upon transmission. Some NFS servers chose to disable UDP checksums for performance reasons, but this caused problems that were difficult to diagnose. In practice, there are rarely good reasons to disable UDP checksums. A detailed discussion of the implementation of the Internet checksum may be found in RFC 1071.
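The computation described in this note can be sketched as follows for IPv4. The function names are chosen for this example, and the code favours clarity over speed. RFC 1071 discusses efficient implementations.

```python
import ipaddress
import struct


def internet_checksum(data):
    """One's complement of the one's complement sum of 16-bit words."""
    if len(data) % 2:
        data += b"\x00"  # pad with a zero byte to a whole number of words
    total = sum(struct.unpack("!%dH" % (len(data) // 2), data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold the carries back in
    return ~total & 0xFFFF


def pseudo_header(src_ip, dst_ip, udp_length):
    """IPv4 pseudo header: addresses, a zero byte, 17 and the UDP length."""
    return (ipaddress.IPv4Address(src_ip).packed
            + ipaddress.IPv4Address(dst_ip).packed
            + struct.pack("!BBH", 0, 17, udp_length))


def udp_checksum(src_ip, dst_ip, segment):
    """Checksum of a segment whose checksum field is currently zero."""
    value = internet_checksum(pseudo_header(src_ip, dst_ip, len(segment))
                              + segment)
    return value or 0xFFFF  # 0x0000 is reserved for "no checksum computed"


def checksum_is_valid(src_ip, dst_ip, segment):
    """Recompute over a received segment, checksum field included."""
    return internet_checksum(pseudo_header(src_ip, dst_ip, len(segment))
                             + segment) == 0
```

A sender would compute `udp_checksum` over the segment with a zeroed checksum field and then write the result into bytes 6 and 7 of the header. The receiver calls `checksum_is_valid` on the segment as received.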

Several types of applications rely on UDP. As a rule of thumb, UDP is used for applications where delay must be minimised or losses can be recovered by the application itself. A first class of the UDP-based applications are applications where the client sends a short request and expects a quick and short answer. The DNS is an example of a UDP application that is often used in the wide area. However, in local area networks, many distributed systems rely on Remote Procedure Call (RPC) that is often used on top of UDP. In Unix environments, the Network File System (NFS) is built on top of RPC and runs frequently on top of UDP. A second class of UDP-based applications are the interactive computer games that need to frequently exchange small messages, such as the player’s location or their recent actions. Many of these games use UDP to minimise the delay and can recover from losses. A third class of applications are multimedia applications such as interactive Voice over IP or interactive Video over IP. These interactive applications expect a delay shorter than about 200 milliseconds between the sender and the receiver and can recover from losses directly inside the application.

Footnotes

| [7] | This limitation is due to the fact that the network layer (IPv4 and IPv6) cannot transport packets that are larger than 64 KBytes. As UDP does not include any segmentation/reassembly mechanism, it cannot split an SDU before sending it. |

| [8] | The complete list of allocated port numbers is maintained by IANA . It may be downloaded from http://www.iana.org/assignments/port-numbers |

| [9] | A discussion of the ephemeral port ranges used by different TCP/UDP implementations may be found in http://www.ncftp.com/ncftpd/doc/misc/ephemeral_ports.html |

The Transmission Control Protocol¶

The Transmission Control Protocol (TCP) was initially defined in RFC 793. Several parts of the protocol have been improved since the publication of the original protocol specification [10]. However, the basics of the protocol remain and an implementation that only supports RFC 793 should inter-operate with today’s implementations.

TCP provides a reliable bytestream, connection-oriented transport service on top of the unreliable connectionless network service provided by IP. TCP is used by a large number of applications.

On the global Internet, most of the applications used in the wide area rely on TCP. Many studies [11] have reported that TCP was responsible for more than 90% of the data exchanged in the global Internet.

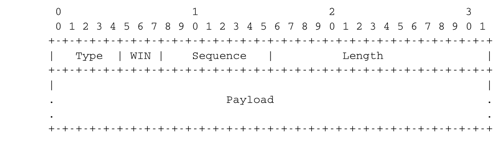

To provide this service, TCP relies on a simple segment format that is shown in the figure below. Each TCP segment contains a header described below and, optionally, a payload. The default length of the TCP header is twenty bytes, but some TCP headers contain options.

A TCP header contains the following fields :

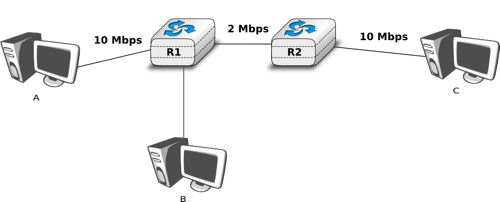

Source and destination ports. The source and destination ports play an important role in TCP, as they allow the identification of the connection to which a TCP segment belongs. When a client opens a TCP connection, it typically selects an ephemeral TCP port number as its source port and contacts the server by using the server’s port number. All the segments that are sent by the client on this connection have the same source and destination ports. The server sends segments that contain as source (resp. destination) port, the destination (resp. source) port of the segments sent by the client (see figure Utilization of the TCP source and destination ports). A TCP connection is always identified by five pieces of information :

- the IP address of the client

- the IP address of the server

- the port chosen by the client

- the port chosen by the server

- TCP

the sequence number (32 bits), acknowledgement number (32 bits) and window (16 bits) fields are used to provide a reliable data transfer, using a window-based protocol. In a TCP bytestream, each byte of the stream consumes one sequence number. Their utilisation will be described in more detail in section TCP reliable data transfer.

the Urgent pointer is used to indicate that some data should be considered as urgent in a TCP bytestream. However, it is rarely used in practice and will not be described here. Additional details about the utilisation of this pointer may be found in RFC 793, RFC 1122 or [Stevens1994].

the flags field contains a set of bit flags that indicate how a segment should be interpreted by the TCP entity receiving it :

- the SYN flag is used during connection establishment

- the FIN flag is used during connection release

- the RST is used in case of problems or when an invalid segment has been received

- when the ACK flag is set, it indicates that the acknowledgment field contains a valid number. Otherwise, the content of the acknowledgment field must be ignored by the receiver

- the URG flag is used together with the Urgent pointer

- the PSH flag is used as a notification from the sender to indicate to the receiver that it should pass all the data it has received to the receiving process. However, in practice TCP implementations do not allow TCP users to indicate when the PSH flag should be set and thus there are few real utilizations of this flag.

the checksum field contains the value of the Internet checksum computed over the entire TCP segment and a pseudo-header as with UDP.

the Reserved field was initially reserved for future utilization. It is now used by RFC 3168.

the TCP Header Length (THL) or Data Offset field is a four bits field that indicates the size of the TCP header in 32 bit words. As a four bits field cannot encode a value larger than 15, the maximum size of the TCP header is thus 60 bytes.

the Optional header extension is used to add optional information to the TCP header. Thanks to this header extension, it is possible to add new fields to the TCP header that were not planned in the original specification. This allowed TCP to evolve since the early eighties. The details of the TCP header extension are explained in sections TCP connection establishment and TCP reliable data transfer.
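The fields described above can be decoded with Python's `struct` module. The sketch below is a minimal illustration with hypothetical helper names. It only interprets the six flags listed in this section and returns the optional header extension as raw bytes.

```python
import struct

# Ports, sequence number, acknowledgement number, data offset byte,
# flags byte, window, checksum and urgent pointer: twenty bytes in total.
TCP_FIXED_HEADER = struct.Struct("!HHIIBBHHH")

FLAG_BITS = {"FIN": 0x01, "SYN": 0x02, "RST": 0x04,
             "PSH": 0x08, "ACK": 0x10, "URG": 0x20}


def parse_tcp_header(segment):
    """Decode the fixed TCP header, then split options and payload."""
    (src_port, dst_port, seq, ack, offset_byte, flag_byte,
     window, checksum, urgent) = TCP_FIXED_HEADER.unpack_from(segment)
    # The header length is stored in the four high order bits, counted in
    # 32-bit words, hence at most 15 * 4 = 60 bytes.
    header_length = (offset_byte >> 4) * 4
    return {
        "source_port": src_port,
        "destination_port": dst_port,
        "sequence_number": seq,
        "acknowledgment_number": ack,
        "header_length": header_length,
        "flags": {name for name, bit in FLAG_BITS.items() if flag_byte & bit},
        "window": window,
        "checksum": checksum,
        "urgent_pointer": urgent,
        "options": segment[TCP_FIXED_HEADER.size:header_length],
        "payload": segment[header_length:],
    }


# A SYN segment without options: the header length is 5 words (20 bytes).
syn = TCP_FIXED_HEADER.pack(1234, 80, 1000, 0, 5 << 4, 0x02, 65535, 0, 0)
print(parse_tcp_header(syn))
```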

The rest of this section is organised as follows. We first explain the establishment and the release of a TCP connection, then we discuss the mechanisms that are used by TCP to provide a reliable bytestream service. We end the section with a discussion of network congestion and explain the mechanisms that TCP uses to avoid congestion collapse.

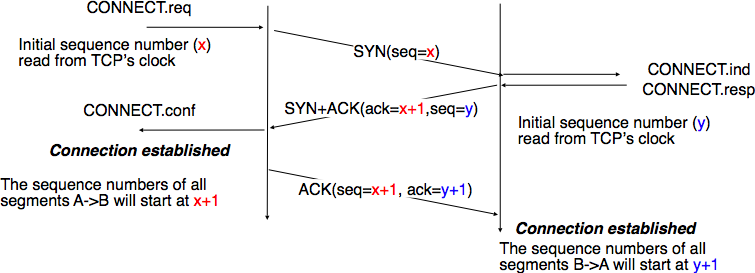

TCP connection establishment¶

A TCP connection is established by using a three-way handshake. The connection establishment phase uses the sequence number, the acknowledgment number and the SYN flag. When a TCP connection is established, the two communicating hosts negotiate the initial sequence number to be used in both directions of the connection. For this, each TCP entity maintains a 32 bits counter, which is supposed to be incremented by one at least every 4 microseconds and after each connection establishment [12]. When a client host wants to open a TCP connection with a server host, it creates a TCP segment with :

- the SYN flag set

- the sequence number set to the current value of the 32 bits counter of the client host’s TCP entity

Upon reception of this segment (which is often called a SYN segment), the server host replies with a segment containing :

- the SYN flag set

- the sequence number set to the current value of the 32 bits counter of the server host’s TCP entity

- the ACK flag set

- the acknowledgment number set to the sequence number of the received SYN segment incremented by 1 ($seq + 1 \pmod{2^{32}}$). When a TCP entity sends a segment having x+1 as acknowledgment number, this indicates that it has received all data up to and including sequence number x and that it is expecting data having sequence number x+1. As the SYN flag was set in a segment having sequence number x, this implies that setting the SYN flag in a segment consumes one sequence number.

This segment is often called a SYN+ACK segment. The acknowledgment confirms to the client that the server has correctly received the SYN segment. The sequence number of the SYN+ACK segment is used by the server host to verify that the client has received the segment. Upon reception of the SYN+ACK segment, the client host replies with a segment containing :

- the ACK flag set

- the acknowledgment number set to the sequence number of the received SYN+ACK segment incremented by 1 ($seq + 1 \pmod{2^{32}}$)

At this point, the TCP connection is open and both the client and the server are allowed to send TCP segments containing data. This is illustrated in the figure below.

In the figure above, the connection is considered to be established by the client once it has received the SYN+ACK segment, while the server considers the connection to be established upon reception of the ACK segment. The first data segment sent by the client (resp. server) has its sequence number set to x+1 (resp. y+1).
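The sequence and acknowledgment numbers exchanged during the handshake can be computed as follows. This sketch only models the numbering, with segments represented as dictionaries; the function name is chosen for this example. All additions are performed modulo $2^{32}$ since the fields are 32 bits wide.

```python
MODULUS = 2 ** 32


def three_way_handshake(client_isn, server_isn):
    """Return the SYN, SYN+ACK and ACK segments of a connection opening."""
    syn = {"flags": {"SYN"}, "seq": client_isn}
    syn_ack = {
        "flags": {"SYN", "ACK"},
        "seq": server_isn,
        # The SYN flag consumes one sequence number.
        "ack": (syn["seq"] + 1) % MODULUS,
    }
    ack = {
        "flags": {"ACK"},
        "seq": syn_ack["ack"],  # x+1, also used by the first data segment
        "ack": (syn_ack["seq"] + 1) % MODULUS,
    }
    return syn, syn_ack, ack


for segment in three_way_handshake(client_isn=2 ** 32 - 1, server_isn=41):
    print(segment)
```

The example deliberately uses the largest possible client sequence number to show the wrap-around: the acknowledgment number returned by the server is 0.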

Note

Computing TCP’s initial sequence number

In the original TCP specification RFC 793, each TCP entity maintained a clock to compute the initial sequence number (ISN) placed in the SYN and SYN+ACK segments. This made the ISN predictable and caused a security issue. The typical security problem was the following. Consider a server that trusts a host based on its IP address and allows the system administrator to login from this host without giving a password [13]. Consider now an attacker who knows this particular configuration and is able to send IP packets having the client’s address as source. He can send fake TCP segments to the server, but does not receive the server’s answers. If he can predict the ISN that is chosen by the server, he can send a fake SYN segment and shortly after the fake ACK segment confirming the reception of the SYN+ACK segment sent by the server. Once the TCP connection is open, he can use it to send any command to the server. To counter this attack, current TCP implementations add randomness to the ISN. One of the solutions, proposed in RFC 1948 is to compute the ISN as

ISN = M + H(localhost, localport, remotehost, remoteport, secret).

where M is the current value of the TCP clock and H is a cryptographic hash function. localhost and remotehost (resp. localport and remoteport) are the IP addresses (resp. port numbers) of the local and remote host and secret is a random number only known by the server. This method allows the server to use different ISNs for different clients at the same time. Measurements performed with the first implementations of this technique showed that it was difficult to implement it correctly, but today’s TCP implementations now generate good ISNs.
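The formula above can be sketched in Python. Several choices below are assumptions made for the example and are not taken from RFC 1948: SHA-256 is used as the hash function H, its output is truncated to 32 bits, and the value M of the TCP clock is passed as an argument so that the result is reproducible.

```python
import hashlib


def compute_isn(clock, localhost, localport, remotehost, remoteport, secret):
    """ISN = M + H(localhost, localport, remotehost, remoteport, secret)."""
    material = (f"{localhost}|{localport}|{remotehost}|{remoteport}|".encode()
                + secret)
    digest = hashlib.sha256(material).digest()
    h = int.from_bytes(digest[:4], "big")  # keep 32 bits of the hash
    return (clock + h) % 2 ** 32


secret = b"known only to this host"  # hypothetical secret for the example
isn_a = compute_isn(1000, "192.0.2.1", 80, "198.51.100.7", 40000, secret)
isn_b = compute_isn(1000, "192.0.2.1", 80, "198.51.100.7", 40001, secret)
print(isn_a, isn_b)
```

For a given connection, the ISN still advances with the clock. Without the secret, however, an attacker who observes the ISNs of their own connections cannot easily derive the ISN used for another client.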

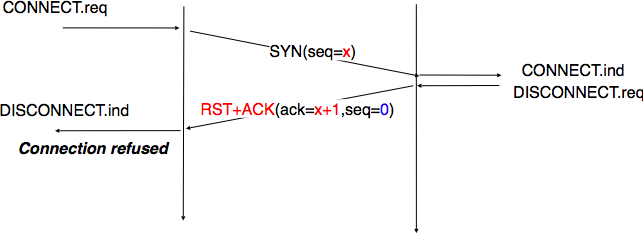

A server could, of course, refuse to open a TCP connection upon reception of a SYN segment. This refusal may be due to various reasons. There may be no server process that is listening on the destination port of the SYN segment. The server could always refuse connection establishments from this particular client (e.g. due to security reasons) or the server may not have enough resources to accept a new TCP connection at that time. In this case, the server would reply with a TCP segment having its RST flag set and containing the sequence number of the received SYN segment, incremented by 1, as its acknowledgment number. This is illustrated in the figure below. We discuss the other utilizations of the TCP RST flag later (see TCP connection release).

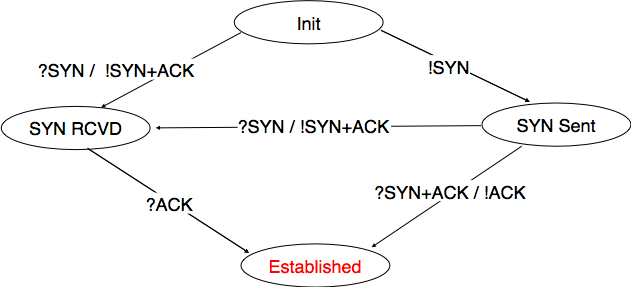

TCP connection establishment can be described as the four state Finite State Machine shown below. In this FSM, !X (resp. ?Y) indicates the transmission of segment X (resp. reception of segment Y) during the corresponding transition. Init is the initial state.

A client host starts in the Init state. It then sends a SYN segment and enters the SYN Sent state where it waits for a SYN+ACK segment. Then, it replies with an ACK segment and enters the Established state where data can be exchanged. On the other hand, a server host starts in the Init state. When a server process starts to listen to a destination port, the underlying TCP entity creates a TCP control block and a queue to process incoming SYN segments. Upon reception of a SYN segment, the server’s TCP entity replies with a SYN+ACK and enters the SYN RCVD state. It remains in this state until it receives an ACK segment that acknowledges its SYN+ACK segment, at which point it enters the Established state.

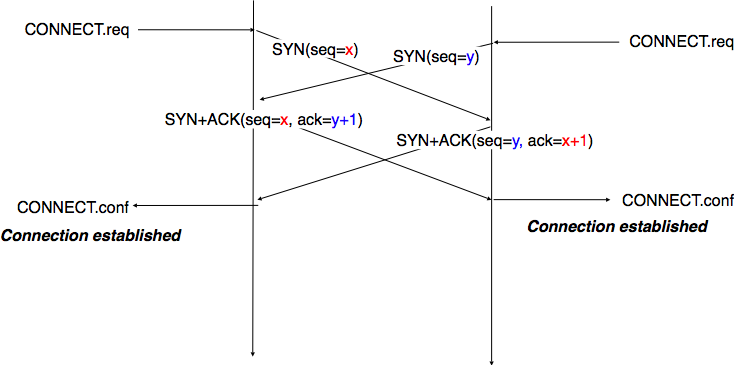

Apart from these two paths in the TCP connection establishment FSM, there is a third path that corresponds to the case when both the client and the server send a SYN segment to open a TCP connection [14]. In this case, the client and the server send a SYN segment and enter the SYN Sent state. Upon reception of the SYN segment sent by the other host, they reply by sending a SYN+ACK segment and enter the SYN RCVD state. The SYN+ACK that arrives from the other host allows it to transition to the Established state. The figure below illustrates such a simultaneous establishment of a TCP connection.
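The three paths through the connection establishment FSM can be written as a transition table. This is a sketch based on the description above: the event names and the `run` helper are chosen for this example, and error handling is reduced to rejecting unexpected events.

```python
# (current state, event) -> (segment to send or None, next state)
# "?X" denotes the reception of segment X, as in the FSM notation.
TRANSITIONS = {
    ("Init", "active open"): ("SYN", "SYN Sent"),
    ("Init", "?SYN"): ("SYN+ACK", "SYN RCVD"),
    ("SYN Sent", "?SYN+ACK"): ("ACK", "Established"),
    ("SYN Sent", "?SYN"): ("SYN+ACK", "SYN RCVD"),      # simultaneous open
    ("SYN RCVD", "?ACK"): (None, "Established"),
    ("SYN RCVD", "?SYN+ACK"): (None, "Established"),    # simultaneous open
}


def run(events, state="Init"):
    """Apply the events in order; return the final state and sent segments."""
    sent = []
    for event in events:
        if (state, event) not in TRANSITIONS:
            raise ValueError(f"unexpected event {event!r} in state {state!r}")
        segment, state = TRANSITIONS[(state, event)]
        if segment is not None:
            sent.append(segment)
    return state, sent


print(run(["active open", "?SYN+ACK"]))          # client path
print(run(["?SYN", "?ACK"]))                     # server path
print(run(["active open", "?SYN", "?SYN+ACK"]))  # simultaneous establishment
```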

Denial of Service attacks

When a TCP entity opens a TCP connection, it creates a Transmission Control Block (TCB). The TCB contains the entire state that is maintained by the TCP entity for each TCP connection. During connection establishment, the TCB contains the local IP address, the remote IP address, the local port number, the remote port number, the current local sequence number and the last sequence number received from the remote entity. Until the mid 1990s, TCP implementations had a limit on the number of TCP connections that could be in the SYN RCVD state at a given time. Many implementations set this limit to about 100 TCBs. This limit was considered sufficient even for heavily loaded HTTP servers given the small delay between the reception of a SYN segment and the reception of the ACK segment that terminates the establishment of the TCP connection. When the limit of 100 TCBs in the SYN RCVD state is reached, the TCP entity discards all received TCP SYN segments that do not correspond to an existing TCB.

This limit of 100 TCBs in the SYN RCVD state was chosen to protect the TCP entity from the risk of overloading its memory with too many TCBs in the SYN RCVD state. However, it was also the reason for a new type of Denial of Service (DoS) attack RFC 4987. A DoS attack is defined as an attack where an attacker can render a resource unavailable in the network. For example, an attacker may cause a DoS attack on a 2 Mbps link used by a company by sending more than 2 Mbps of packets through this link. In this case, the DoS attack was more subtle. As a TCP entity discards all received SYN segments as soon as it has 100 TCBs in the SYN RCVD state, an attacker simply had to send a few hundred SYN segments every second to a server and never reply to the received SYN+ACK segments. To avoid being caught, attackers were of course sending these SYN segments with a different address than their own IP address [15]. On most TCP implementations, once a TCB entered the SYN RCVD state, it remained in this state for several seconds, waiting for a retransmission of the initial SYN segment. This attack was later called a SYN flood attack and the servers of the ISP named panix were among the first to be affected by this attack.

To avoid the SYN flood attacks, recent TCP implementations no longer enter the SYN RCVD state upon reception of a SYN segment. Instead, they reply directly with a SYN+ACK segment and wait until the reception of a valid ACK. This implementation trick is only possible if the TCP implementation is able to verify that the received ACK segment acknowledges the SYN+ACK segment sent earlier without storing the initial sequence number of this SYN+ACK segment in a TCB. The solution to this problem, which is known as SYN cookies, is to compute the 32 bits of the ISN as follows :

- the high order bits contain the low order bits of a counter that is incremented slowly

- the low order bits contain a hash value computed over the local and remote IP addresses and ports and a random secret only known to the server

The advantage of the SYN cookies is that by using them, the server does not need to create a TCB upon reception of the SYN segment and can still check the returned ACK segment by recomputing the SYN cookie. The main disadvantage is that they are not fully compatible with the TCP options. This is why they are not enabled by default on a typical system.
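The computation described above can be sketched as follows. This is only an illustration under stated assumptions, not the layout used by any particular operating system: the 8/24 split between counter bits and hash bits, the use of HMAC-SHA256 as the hash, the inclusion of the counter in the hashed data and the function names are all choices made for this example.

```python
import hashlib
import hmac
import ipaddress
import struct

COUNTER_BITS = 8                 # assumption: width of the slow counter
HASH_BITS = 32 - COUNTER_BITS    # remaining low order bits carry the hash
HASH_MASK = (1 << HASH_BITS) - 1


def _hash_bits(secret, counter, src_ip, src_port, dst_ip, dst_port):
    """Keyed hash over the addresses, ports and counter, cut to HASH_BITS."""
    msg = (ipaddress.ip_address(src_ip).packed
           + ipaddress.ip_address(dst_ip).packed
           + struct.pack("!HHB", src_port, dst_port, counter))
    digest = hmac.new(secret, msg, hashlib.sha256).digest()
    return int.from_bytes(digest[:4], "big") & HASH_MASK


def make_cookie(secret, counter, src_ip, src_port, dst_ip, dst_port):
    """Build a 32-bit ISN: counter in the high bits, hash in the low bits."""
    counter &= (1 << COUNTER_BITS) - 1
    low = _hash_bits(secret, counter, src_ip, src_port, dst_ip, dst_port)
    return (counter << HASH_BITS) | low


def check_cookie(secret, now_counter, ack, src_ip, src_port, dst_ip, dst_port,
                 max_age=1):
    """Validate the ack field of the final ACK without any stored TCB.

    The SYN consumes one sequence number, so a valid ACK carries cookie + 1.
    """
    cookie = (ack - 1) % 2**32
    counter = cookie >> HASH_BITS
    age = (now_counter - counter) % (1 << COUNTER_BITS)
    if age > max_age:            # cookie is too old to be accepted
        return False
    expected = _hash_bits(secret, counter, src_ip, src_port, dst_ip, dst_port)
    return (cookie & HASH_MASK) == expected
```

The server only needs the secret and the current counter value to validate an ACK, which is why no per-connection state has to be kept between the SYN and the ACK.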

Retransmitting the first SYN segment

As IP provides an unreliable connectionless service, the SYN and SYN+ACK segments sent to open a TCP connection could be lost. Current TCP implementations start a retransmission timer when they send the first SYN segment. This timer is often set to three seconds for the first retransmission and then doubles after each retransmission RFC 2988. TCP implementations also enforce a maximum number of retransmissions for the initial SYN segment.
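As a small illustration of this timer, the sketch below lists the instants at which the initial SYN would be retransmitted when the timer starts at three seconds and doubles each time. The limit of five retransmissions is an assumption made for the example; the actual maximum depends on the implementation.

```python
def syn_retransmission_schedule(initial_timeout=3.0, max_retransmissions=5):
    """Return the time (in seconds after the first SYN) of each retransmission."""
    times = []
    timeout = initial_timeout
    elapsed = 0.0
    for _ in range(max_retransmissions):
        elapsed += timeout       # the timer expires, the SYN is sent again
        times.append(elapsed)
        timeout *= 2             # the timer doubles after each retransmission
    return times


print(syn_retransmission_schedule())  # [3.0, 9.0, 21.0, 45.0, 93.0]
```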

As explained earlier, TCP segments may contain an optional header extension. In the SYN and SYN+ACK segments, these options are used to negotiate some parameters and the utilisation of extensions to the basic TCP specification.

The first parameter which is negotiated during the establishment of a TCP connection is the Maximum Segment Size (MSS). The MSS is the size of the largest segment that a TCP entity is able to process. According to RFC 879, all TCP implementations must be able to receive TCP segments containing 536 bytes of payload. However, most TCP implementations are able to process larger segments. Such TCP implementations use the TCP MSS Option in the SYN/SYN+ACK segment to indicate the largest segment they are able to process. The MSS value indicates the maximum size of the payload of the TCP segments. The client (resp. server) stores in its TCB the MSS value announced by the server (resp. the client).

Another utilisation of TCP options during connection establishment is to enable TCP extensions. For example, consider RFC 1323 (which is discussed in TCP reliable data transfer). RFC 1323 defines TCP extensions to support timestamps and larger windows. If the client supports RFC 1323, it adds an RFC 1323 option to its SYN segment. If the server understands this RFC 1323 option and wishes to use it, it replies with an RFC 1323 option in the SYN+ACK segment and the extension defined in RFC 1323 is used throughout the TCP connection. Otherwise, if the server’s SYN+ACK does not contain the RFC 1323 option, the client is not allowed to use this extension and the corresponding TCP header options throughout the TCP connection. TCP’s option mechanism is flexible and it allows the extension of TCP while maintaining compatibility with older implementations.

The TCP options are encoded by using a Type Length Value format where :

- the first byte indicates the type of the option.

- the second byte indicates the total length of the option (including the first two bytes) in bytes

- the last bytes are specific for each type of option

RFC 793 defines the Maximum Segment Size (MSS) TCP option that must be understood by all TCP implementations. This option (type 2) has a length of 4 bytes and contains a 16-bit word that indicates the MSS supported by the sender of the SYN segment. The MSS option can only be used in TCP segments having the SYN flag set.

RFC 793 also defines two special options that must be supported by all TCP implementations. The first option is End of option. It is encoded as a single byte having value 0x00 and can be used to ensure that the TCP header extension ends on a 32-bit boundary. The No-Operation option, encoded as a single byte having value 0x01, can be used when the TCP header extension contains several TCP options that should be aligned on 32-bit boundaries. All other options [16] are encoded by using the TLV format.
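The encoding described above can be decoded with a short loop. The sketch below is a minimal parser written for this explanation: the function names are hypothetical, and the choice to fall back to 536 bytes when no MSS option is present is an assumption based on the minimum stated earlier. Unknown option types are returned rather than rejected.

```python
import struct

END_OF_OPTIONS = 0x00   # single byte, terminates the option list
NO_OPERATION = 0x01     # single byte, used for alignment
MSS = 0x02              # type 2, length 4, 16-bit value


def parse_tcp_options(data):
    """Return a list of (type, value_bytes) found in a TCP header extension."""
    options = []
    i = 0
    while i < len(data):
        kind = data[i]
        if kind == END_OF_OPTIONS:
            break                       # the rest is padding
        if kind == NO_OPERATION:
            i += 1                      # one-byte option, no length field
            continue
        if i + 1 >= len(data):
            raise ValueError("truncated option: missing length byte")
        length = data[i + 1]            # total length, including type and length
        if length < 2 or i + length > len(data):
            raise ValueError("invalid option length")
        options.append((kind, bytes(data[i + 2:i + length])))
        i += length
    return options


def mss_from_options(options, default=536):
    """Extract the announced MSS, or fall back to the assumed default."""
    for kind, value in options:
        if kind == MSS and len(value) == 2:
            return struct.unpack("!H", value)[0]
    return default


# MSS option (1460), two No-Operation bytes, an unknown option, End of option
raw = bytes([2, 4, 0x05, 0xB4, 1, 1, 254, 3, 0xAA, 0])
print(mss_from_options(parse_tcp_options(raw)))  # 1460
```

Because every option other than the two single-byte ones carries its own length, the parser can skip an option type it does not know and continue with the next one.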

Note

The robustness principle

The handling of the TCP options by TCP implementations is one of the many applications of the robustness principle, which is usually attributed to Jon Postel and is often quoted as “Be liberal in what you accept, and conservative in what you send” RFC 1122.

Concerning the TCP options, the robustness principle implies that a TCP implementation should be able to accept TCP options that it does not understand, in particular in received SYN segments, and that it should be able to parse any received segment without crashing, even if the segment contains an unknown TCP option. Furthermore, a server should not send, in the SYN+ACK segment or later, options that have not been proposed by the client in the SYN segment.
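A server following these two rules could select the extensions for its SYN+ACK along the lines of the sketch below. The function and the option names used as labels are hypothetical; they only illustrate that unknown options are ignored and that nothing is returned that the client did not propose.

```python
def options_for_syn_ack(client_offered, server_supported):
    """Select the extensions the server may enable in its SYN+ACK.

    Options the server does not understand are silently ignored (liberal in
    what is accepted) and no option is returned unless the client proposed it
    (conservative in what is sent).
    """
    return [name for name in client_offered if name in server_supported]


offered = ["timestamps", "window-scale", "some-unknown-extension"]
supported = {"timestamps", "window-scale", "another-extension"}
print(options_for_syn_ack(offered, supported))  # ['timestamps', 'window-scale']
```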

TCP connection release¶

TCP, like most connection-oriented transport protocols, supports two types of connection release :

- graceful connection release, where each TCP user can release its own direction of data transfer

- abrupt connection release, where either one user closes both directions of data transfer or one TCP entity is forced to close the connection (e.g. because the remote host does not reply anymore or due to lack of resources)

The abrupt connection release mechanism is very simple and relies on a single segment having the RST bit set. A TCP segment containing the RST bit can be sent for the following reasons :

- a non-SYN segment was received for a non-existing TCP connection RFC 793

- by extension, some implementations respond with an RST segment to a segment that is received on an existing connection but with an invalid header RFC 3360. This causes the corresponding connection to be closed and has caused security attacks RFC 4953

- by extension, some implementations send an RST segment when they need to close an existing TCP connection (e.g. because there are not enough resources to support this connection or because the remote host is considered to be unreachable). Measurements have shown that this usage of TCP RST was widespread [AW05]

When an RST segment is sent by a TCP entity, it should contain the current value of the sequence number for the connection (or 0 if it does not belong to any existing connection) and the acknowledgement number should be set to the next expected in-sequence sequence number on this connection.

Note

TCP RST wars

TCP implementers should ensure that two TCP entities never enter a TCP RST war where host A is sending an RST segment in response to a previous RST segment that was sent by host B in response to a TCP RST segment sent by host A ... To avoid such an infinite exchange of RST segments that do not carry data, a TCP entity is never allowed to send an RST segment in response to another RST segment.

The normal way of terminating a TCP connection is by using the graceful TCP connection release. This mechanism uses the FIN flag of the TCP header and allows each host to release its own direction of data transfer. As for the SYN flag, the utilisation of the FIN flag in the TCP header consumes one sequence number. The figure FSM for TCP connection release shows the part of the TCP FSM used when a TCP connection is released.

Starting from the Established state, there are two main paths through this FSM.

The first path is when the host receives a segment with sequence number x and the FIN flag set. The utilisation of the FIN flag indicates that the byte before sequence number x was the last byte of the byte stream sent by the remote host. Once all of the data has been delivered to the user, the TCP entity sends an ACK segment whose ack field is set to (x+1) mod 2^32 to acknowledge the FIN segment. The FIN segment is subject to the same retransmission mechanisms as a normal TCP segment. In particular, its transmission is protected by the retransmission timer. At this point, the TCP connection enters the CLOSE_WAIT state. In this state, the host can still send data to the remote host. Once all its data have been sent, it sends a FIN segment and enters the LAST_ACK state. In this state, the TCP entity waits for the acknowledgement of its FIN segment. It may still retransmit unacknowledged data segments, e.g. if the retransmission timer expires. Upon reception of the acknowledgement for the FIN segment, the TCP connection is completely closed and its TCB can be discarded.

The second path is when the host decides first to send a FIN segment. In this case, it enters the FIN_WAIT1 state. In this state, it can retransmit unacknowledged segments but cannot send new data segments. It waits for an acknowledgement of its FIN segment, but may receive a FIN segment sent by the remote host. In the first case, the TCP connection enters the FIN_WAIT2 state. In this state, new data segments from the remote host are still accepted until the reception of the FIN segment. The acknowledgement for this FIN segment is sent once all data received before the FIN segment have been delivered to the user and the connection enters the TIME_WAIT state. In the second case, a FIN segment is received and the connection enters the Closing state once all data received from the remote host have been delivered to the user. In this state, no new data segments can be sent and the host waits for an acknowledgement of its FIN segment before entering the TIME_WAIT state.

The TIME_WAIT state is different from the other states of the TCP FSM. A TCP entity enters this state after having sent the last ACK segment on a TCP connection. This segment indicates to the remote host that all the data that it has sent have been correctly received and that it can safely release the TCP connection and discard the corresponding TCB. After having sent the last ACK segment, a TCP connection enters the TIME_WAIT state and remains in this state for 2*MSL seconds. During this period, the TCB of the connection is maintained. This ensures that the TCP entity that sent the last ACK maintains enough state to be able to retransmit this segment if this ACK segment is lost and the remote host retransmits its last FIN segment or another one. The delay of 2*MSL seconds ensures that any duplicate segments on the connection would be handled correctly without causing the transmission of an RST segment. Without the TIME_WAIT state and the 2*MSL seconds delay, the connection release would not be graceful when the last ACK segment is lost.
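The two paths through the FSM can be summarised as a transition table. The sketch below only covers the transitions described in the text; the event names are labels invented for this example, and the actions attached to each transition (sending ACK or FIN segments, delivering data to the user) are left out.

```python
# (current state, event) -> next state, for the connection release part only
TRANSITIONS = {
    # first path: the remote host sends its FIN first
    ("ESTABLISHED", "recv_fin"): "CLOSE_WAIT",
    ("CLOSE_WAIT", "send_fin"): "LAST_ACK",
    ("LAST_ACK", "recv_ack_of_fin"): "CLOSED",
    # second path: the local host sends its FIN first
    ("ESTABLISHED", "send_fin"): "FIN_WAIT1",
    ("FIN_WAIT1", "recv_ack_of_fin"): "FIN_WAIT2",
    ("FIN_WAIT1", "recv_fin"): "CLOSING",
    ("FIN_WAIT2", "recv_fin"): "TIME_WAIT",
    ("CLOSING", "recv_ack_of_fin"): "TIME_WAIT",
    # the TCB is discarded once the TIME_WAIT delay has elapsed
    ("TIME_WAIT", "timeout"): "CLOSED",
}


def run(events, state="ESTABLISHED"):
    """Apply a sequence of events and return the resulting state."""
    for event in events:
        try:
            state = TRANSITIONS[(state, event)]
        except KeyError:
            raise ValueError(f"event {event!r} not allowed in state {state}") from None
    return state


print(run(["recv_fin", "send_fin", "recv_ack_of_fin"]))   # CLOSED
print(run(["send_fin", "recv_ack_of_fin", "recv_fin"]))   # TIME_WAIT
```

Note that only the host that sends the last ACK segment passes through TIME_WAIT; the host on the first path reaches the closed state directly from LAST_ACK.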

Note

TIME_WAIT on busy TCP servers

The 2*MSL seconds delay in the TIME_WAIT state is an important operational problem on servers having thousands of simultaneously opened TCP connections [FTY99]. Consider for example a busy web server that processes 10,000 TCP connections every second. If each of these connections remains in the TIME_WAIT state for 4 minutes, this implies that the server would have to maintain more than 2 million TCBs at any time. For this reason, some TCP implementations prefer to perform an abrupt connection release by sending an RST segment to close the connection [AW05] and immediately discard the corresponding TCB. However, if the RST segment is lost, the remote host continues to maintain a TCB for a connection that no longer exists. This optimisation reduces the number of TCBs maintained by the host sending the RST segment but at the potential cost of increased processing on the remote host when the RST segment is lost.
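The figure of more than 2 million TCBs follows from a simple steady-state estimate, sketched below. It assumes that the server is the host that enters TIME_WAIT for every connection and that connections are released at the same rate as they are processed; the function name is chosen for this example.

```python
def tcbs_in_time_wait(connections_per_second, time_wait_seconds):
    """Steady-state number of TCBs kept only because of the TIME_WAIT delay."""
    return connections_per_second * time_wait_seconds


# 10,000 connections per second, each kept for 4 minutes in TIME_WAIT
print(tcbs_in_time_wait(10_000, 4 * 60))  # 2400000
```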

TCP reliable data transfer¶

The original TCP data transfer mechanisms were defined in RFC 793. Based on the experience of using TCP on the growing global Internet, this part of the TCP specification has been updated and improved several times, always while preserving the backward compatibility with older TCP implementations. In this section, we review the main data transfer mechanisms used by TCP.

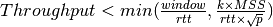

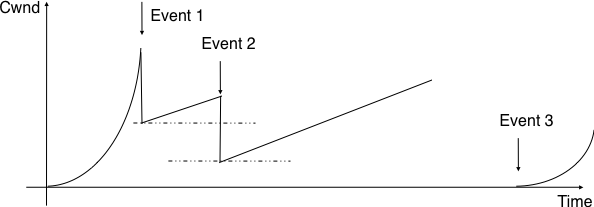

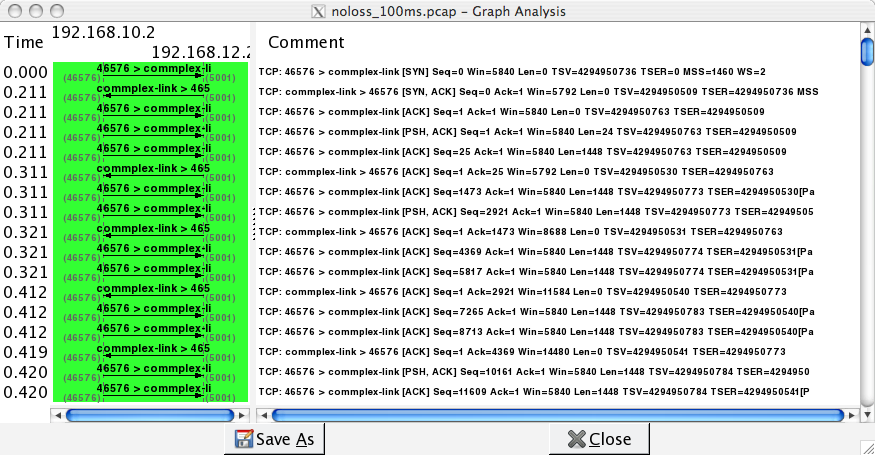

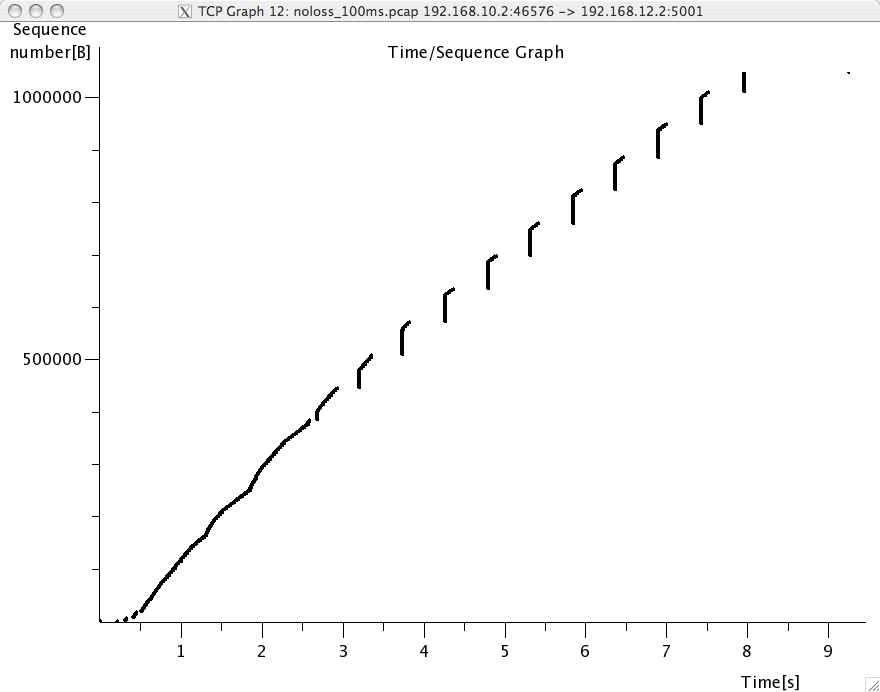

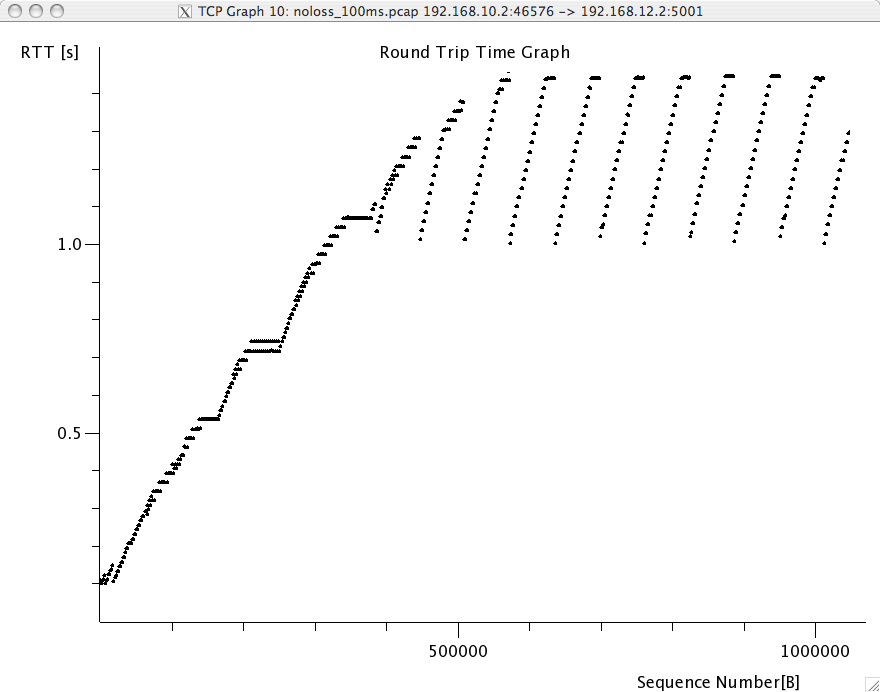

TCP is a window-based transport protocol that provides a bi-directional byte stream service. This has several implications on the fields of the TCP header and the mechanisms used by TCP. The three fields of the TCP header are :

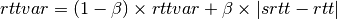

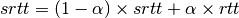

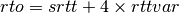

- sequence number. TCP uses a 32-bit sequence number. The sequence number placed in the header of a TCP segment containing data is the sequence number of the first byte of the payload of the TCP segment.